Research Article

A Melanoma Diagnosis Using Dermoscopy Images Utilizing Convolutional Neural Networks

- Archishma Marrapu *

Thomas Jefferson High School for Science and Technology, VA, United States.

*Corresponding Author: Archishma Marrapu, Thomas Jefferson High School for Science and Technology, VA, United States.

Citation: Archishma Marrapu. (2024). A Melanoma Diagnosis Using Dermoscopy Images Utilizing Convolutional Neural Networks. Clinical Case Reports and Studies, BioRes Scientia Publishers. 5(4):1-7. DOI: 10.59657/2837-2565.brs.24.122

Copyright: © 2024 Archishma Marrapu, this is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Received: March 15, 2024 | Accepted: March 29, 2024 | Published: April 05, 2024

Abstract

Introduction: Melanoma is the most dangerous type of skin cancer, with over 200,000 new cases in the United States annually.

Background: Melanoma affects a wide range of people and is associated with several risk factors, although its exact cause is unknown. Doctors currently diagnose it with several traditional, manual methods. These methods have drawbacks that reduce the accuracy and effectiveness of diagnosis: misdiagnosis, low accuracy, long turnaround time, and human error. Automating melanoma diagnosis can benefit both doctors and patients.

Methods: New technologies such as Artificial Intelligence can increase the effectiveness and accuracy of diagnosis. This paper proposes an Artificial Intelligence and Deep Learning model that diagnoses melanoma at an early stage with higher accuracy and in less time. The model is delivered as a mobile application that integrates with a handheld dermatoscope to capture dermoscopy images and diagnose melanoma using a pre-trained Machine Learning model. The model was trained on a large number of images in order to produce accurate and effective results.

Results: The model has a 99% accuracy rate and produces a diagnosis within a few seconds.

Conclusions: This method of diagnosis can eliminate manual errors and can reduce the several weeks of wait time needed for manual melanoma diagnosis results.

Keywords: cancer; skin cancer; carcinoma

Introduction

The most common type of cancer in the United States is skin cancer [1]. One in every three cancers diagnosed worldwide is a skin cancer [2]. Skin cancer is divided into three major types, based on their location within the skin: basal cell carcinoma, squamous cell carcinoma, and melanoma [3]. Melanoma is the most dangerous type of skin cancer, causing the greatest number of deaths [4].

Researchers do not know the exact cause of melanoma, but multiple risk factors are involved. Age, gender, race, ethnicity, and external habits can all be risk factors [5]. Women under the age of forty are usually more at risk of developing melanoma [1]. Exposure to ultraviolet (UV) radiation can play a major role in the development of melanoma. UV radiation causes the melanocytes to produce more melanin, altering the color of the skin [6]. UV rays increase the level of melanocyte-stimulating hormone (MSH), specifically alpha-MSH, a vital component in regulating immunity and inflammation [7]. Alpha-MSH binds to the melanocortin 1 receptor (MC1R) in order to produce melanin, C18H10N2O4 [8].

Genetics and heredity also contribute to melanoma formation: 10% of all melanoma patients have a family history [1]. The most common genetic mutations that cause melanoma occur in the BRAF oncogene, which controls cell growth by altering the proteins that regulate it [9]. BRAF mutations are not inherited and are most commonly found in the younger population [9]. The BRAF gene, found on chromosome 7q34, codes for a serine/threonine protein kinase, an enzyme involved in regulating cell growth and cell death [9]. BRAF is a 2949 base pair sequence that spans 18 exons and codes for a 766 amino acid protein [9]. There are two types of BRAF gene mutations, V600E and non-V600E [9]. The V600E mutation substitutes glutamic acid for valine at position 600 [9]. Non-V600E mutations occur mainly in smokers and can also lead to melanoma [9]. The NRAS, CDKN2A, and NF1 genes can affect the development of melanoma, whereas the BAP1 gene can increase a person's risk of developing melanoma [10].

Melanoma has different methods of diagnosis and treatment in the medical field. It is diagnosed using different types of biopsy, such as shave, punch, excisional, incisional, and optical biopsy [11]. After melanoma is diagnosed, imaging such as CT, MRI, PET, and X-ray scans is used to determine whether the melanoma has metastasized, that is, spread to other parts of the body [11].

Problem Statement

The diagnosis process for melanoma is slow. After undergoing a biopsy, the patient must wait two to three weeks for results. During this time, the cancer cells might grow or metastasize. Manual diagnosis also suffers from human error, misdiagnosis, low accuracy, and long turnaround time. Because misdiagnosis of melanoma is quite common, doctors recommend that patients have a second doctor conduct tests and verify the results, which adds another two weeks or more. In total, up to six weeks can pass before the patient receives an accurate diagnosis, and the melanoma can progress and grow in that period. Automating melanoma diagnosis can help eliminate these human errors and provide more accurate results in less time.

Related Work

Many studies explore the automation of melanoma diagnosis and the use of different technologies for melanoma. Various universities and companies, such as WebMD, the Massachusetts Institute of Technology, and Stanford University, have created technological solutions and evaluated existing tools.

A WebMD study explores the use of Artificial Intelligence in skin cancer diagnosis, specifically for pigmented cancerous tissues, which can be recognized by their colors and boundaries. One technology it examines is MelaFind, a tool that uses infrared imaging to provide more information about the melanoma tissue. Another is SkinVision, an app that lets the user take a picture of the skin, which is then assessed. The study also states that neither technology is accurate enough to be used independently; each still requires a manual diagnosis method, most likely a biopsy, to be performed as well [12].

Another piece of research from the Massachusetts Institute of Technology investigates the usage of Deep Convolutional Neural Networks (DCNN) to monitor or detect suspicious pigmented lesions (SPLs). This technology categorizes the results into two colors, yellow meaning further inspection should be considered, and red meaning further inspection is recommended. Although technology is being used to enhance the diagnosis, there are still a myriad of flaws such as no direct detection, lack of accessibility, low accuracy rates, and human error/intervention [13].

Methods

The methodology consists of three major components: the Deep Learning model, the dermatoscope, and the mobile application. The deep learning model comprises data preparation, data augmentation, pre-processing, weighted training, feature extraction, classification, and prediction.

Data Preparation

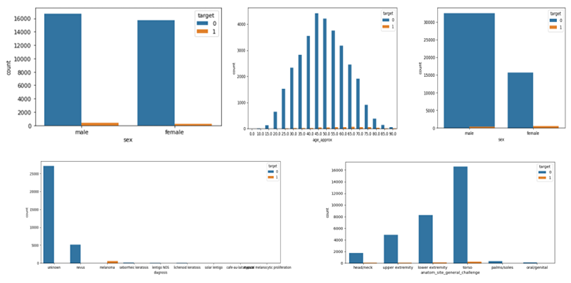

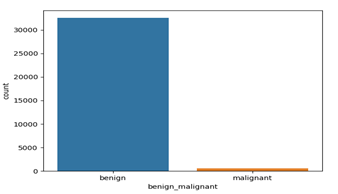

The dermoscopic datasets were obtained from the ISIC 2016, ISIC 2017, and ISIC 2020 challenges [14]. The data is well segmented by attributes such as gender, age, anatomical location, and type of non-melanoma lesion, as shown in Figure 1. The split between benign (noncancerous) and malignant (cancerous) images is roughly 98% to 2%: 33126 benign images and 584 malignant images, as shown in Figure 2. The dataset is therefore highly imbalanced, which could lead to overfitting or biased training of the model.

Figure 1: Data Segmentation.

Figure 2: Benign and Malignant Data Segmentation.
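The effect of this imbalance can be made concrete with a short calculation. The sketch below uses only the image counts given in the text; the "always benign" classifier is a hypothetical baseline introduced for illustration, not part of the proposed model.

```python
# Counts taken from the text; everything else is illustrative.
BENIGN = 33126
MALIGNANT = 584


def majority_baseline(n_negative: int, n_positive: int) -> dict:
    """Metrics of a hypothetical classifier that always predicts the negative (benign) class."""
    total = n_negative + n_positive
    return {
        "positive_share": n_positive / total,  # fraction of malignant images
        "accuracy": n_negative / total,        # every benign image counts as correct
        "recall": 0.0,                         # no malignant image is ever detected
    }


baseline = majority_baseline(BENIGN, MALIGNANT)
print(f"malignant share:        {baseline['positive_share']:.2%}")
print(f"always-benign accuracy: {baseline['accuracy']:.2%}")
print(f"always-benign recall:   {baseline['recall']:.0%}")
```

A model can therefore score above 98% accuracy on this data without detecting a single melanoma, which is why the later steps rebalance the training signal.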

The images were divided into training and validation sets, with 82% used to train the algorithm and 18% used for validation. A separate test dataset of 10000 unseen images was prepared to evaluate the performance of the algorithm.
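A minimal sketch of an 82%/18% split is given below. It assumes the split is stratified, meaning each class is divided separately so that the rare malignant images appear in both sets; the text does not say whether this was done. The function name `stratified_split` and the seed are hypothetical.

```python
import random


def stratified_split(labels, train_fraction=0.82, seed=0):
    """Return (train_indices, val_indices), splitting each class separately."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, val = [], []
    for indices in by_class.values():
        rng.shuffle(indices)                        # random order within the class
        cut = round(len(indices) * train_fraction)  # size of the training share
        train.extend(indices[:cut])
        val.extend(indices[cut:])
    return sorted(train), sorted(val)


# Toy labels with the same class counts as the text (0 = benign, 1 = malignant).
labels = [0] * 33126 + [1] * 584
train_idx, val_idx = stratified_split(labels)
print("train:", len(train_idx), "validation:", len(val_idx))
print("malignant in train:", sum(labels[i] for i in train_idx))
print("malignant in validation:", sum(labels[i] for i in val_idx))
```

Splitting per class keeps the benign-to-malignant ratio nearly identical in both sets, which matters when one class makes up less than 2% of the data.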

Data Augmentation

The original dataset contains only 584 malignant images, fewer than 2 percent of the number of benign images, which makes the data highly imbalanced and would yield biased results. High-performance deep networks also require large datasets. We therefore applied several image augmentation techniques to artificially increase the number of images: the images were rotated by 90, 180, and 270 degrees, flipped horizontally and vertically, and resized to different sizes. Each image is transformed to add six more images. Table 1 summarizes the details of the original dataset and the augmented dataset.

Table 1: Dataset after augmentation.

| Dataset | Total Images | Benign | Malignant |
| --- | --- | --- | --- |
| ISIC Images | 33710 | 33126 | 584 |
| Augmented | 202260 | 198756 | 3504 |
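The augmentation step can be sketched in plain Python on a tiny image stored as a list of rows. The particular set of six transforms below (three rotations, two flips, one resize) is an assumption chosen to match the description of six additional images per original; the helper names are hypothetical, and a real pipeline would operate on full RGB arrays with an imaging library.

```python
def rotate90(img):
    """Rotate a 2D image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Mirror the image top to bottom."""
    return [list(row) for row in img[::-1]]


def resize_nearest(img, new_h, new_w):
    """Resize with nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    return [[img[r * h // new_h][c * w // new_w] for c in range(new_w)]
            for r in range(new_h)]


def augment(img):
    """Return six transformed copies of one image (assumed transform set)."""
    rot90 = rotate90(img)
    rot180 = rotate90(rot90)
    rot270 = rotate90(rot180)
    upscaled = resize_nearest(img, 2 * len(img), 2 * len(img[0]))
    return [rot90, rot180, rot270, flip_horizontal(img), flip_vertical(img), upscaled]


tile = [[1, 2],
        [3, 4]]
variants = augment(tile)
for variant in variants:
    print(variant)

# Six generated images per original reproduce the "Augmented" row of Table 1.
print(6 * 33710, 6 * 33126, 6 * 584)
```

The last line checks the arithmetic: six generated images for each of the 33710 originals gives the 202260 images listed in the table.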

Pre-processing

Pre-processing is a vital step in detection: it removes noise and enhances the quality of the original image. It also limits the influence of abnormalities in the background on the result. The main purpose of this step is to improve the quality of the melanoma image by removing unrelated and surplus parts of the background before further processing. Image scaling, color enhancement, and image restoration techniques were employed in pre-processing.

Model

The model extends the Xception model with a 13-layer Convolutional Neural Network to achieve high accuracy rates. The product uses transfer learning by leveraging an Xception model pre-trained on two million images. This model was chosen based on studies showing that Xception outperforms Inception, VGG-16, and ResNet-152, as compared in Table 2.

Table 2: Comparison of Models

| Model | Top-1 accuracy | Top-5 accuracy |
| --- | --- | --- |
| VGG-16 | 0.715 | 0.901 |
| ResNet-152 | 0.770 | 0.933 |
| Inception V3 | 0.782 | 0.941 |
| Xception | 0.790 | 0.945 |

The model takes input images of size 256 x 256 x 3, so all images were resized during pre-processing to fit the input.
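The resizing step can be illustrated with a small, dependency-free sketch. Only the 256 x 256 x 3 target shape comes from the text; nearest-neighbour sampling and the scaling of pixel values to the range [-1, 1] are assumptions, since the paper does not state its interpolation method or normalization. The function names are hypothetical.

```python
TARGET_SIZE = 256  # the model input is 256 x 256 x 3 according to the text


def resize_nearest_rgb(image, size=TARGET_SIZE):
    """Resize an RGB image (rows of (r, g, b) tuples) to size x size."""
    h, w = len(image), len(image[0])
    return [[image[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def scale_pixels(image):
    """Map 8-bit channel values from [0, 255] to [-1.0, 1.0] (assumed normalization)."""
    return [[tuple(v / 127.5 - 1.0 for v in pixel) for pixel in row]
            for row in image]


def preprocess(image):
    """Resize, then normalize, so the result matches the model input shape."""
    return scale_pixels(resize_nearest_rgb(image))


# A hypothetical 3 x 4 RGB image in which every pixel is (0, 128, 255).
raw = [[(0, 128, 255)] * 4 for _ in range(3)]
x = preprocess(raw)
print(len(x), len(x[0]), len(x[0][0]))
```

Whatever the original image dimensions, the output always has the fixed shape the network expects.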

Xception Model Architecture

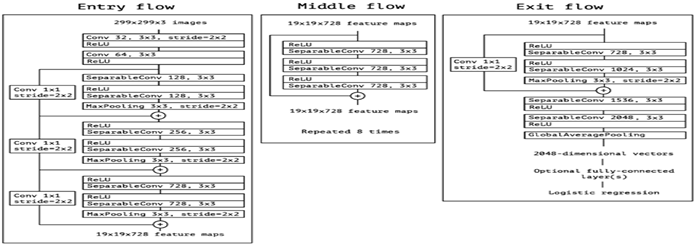

The Xception CNN model is a deep learning model based on the Inception model. It uses depthwise separable convolutions, which process not only the two-dimensional (spatial) aspect of the image but also its depth (channels). The architecture of the model is shown in Figure 3. The data flow starts in the entry flow, which has repeated convolution, rectified linear unit (ReLU) activation, and max pooling layers. The output of the entry flow is passed as input to the middle flow, which consists of ReLU activation and separable convolution layers and is repeated eight times. The output of the middle flow is passed as input to the exit flow, which has ReLU activation and separable convolution layers. All separable convolution layers are followed by batch normalization.
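A worked parameter count helps show why depthwise separable convolutions are attractive. The sketch below compares a standard convolution with a depthwise separable one for the same kernel size and channel counts; biases are ignored, and the layer size used (3 x 3 kernel, 728 input and output channels) is an illustrative choice rather than a figure taken from this paper.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise separable convolution (biases ignored)."""
    depthwise = k * k * c_in   # one k x k spatial filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


k, c_in, c_out = 3, 728, 728  # illustrative layer size
standard = standard_conv_params(k, c_in, c_out)
separable = separable_conv_params(k, c_in, c_out)
print("standard: ", standard)
print("separable:", separable)
print(f"reduction: {standard / separable:.1f}x")
```

Separating the spatial filtering from the channel mixing cuts the weight count by almost a factor of nine for this layer size, which is what allows a deep stack of such layers to remain trainable.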

Training

The model was trained on 33000 original images and, counting the augmented images, two million images in total. Because the dataset is imbalanced, the model tends to over-predict the majority class, leading to predictions biased toward the larger population. To eliminate this, the model was trained with separate class weights for benign (0.5) and malignant (28). The weights are calculated as shown below.

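$$w_{\text{benign}} = \frac{1}{N_{\text{benign}}} \times \frac{N_{\text{total}}}{2}$$

$$w_{\text{malignant}} = \frac{1}{N_{\text{malignant}}} \times \frac{N_{\text{total}}}{2}$$

Here $N_{\text{benign}}$ and $N_{\text{malignant}}$ are the numbers of benign and malignant images, and $N_{\text{total}}$ is their sum.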
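The stated weights (0.5 for benign, 28 for malignant) are consistent with the common inverse-frequency heuristic, in which each class weight equals the total image count divided by twice the class count. The sketch below assumes that heuristic, which is an inference from the stated values rather than a confirmed detail of the author's code, and shows how such weights scale a binary cross-entropy loss. The function names are hypothetical.

```python
import math

BENIGN, MALIGNANT = 33126, 584  # image counts from the text
TOTAL = BENIGN + MALIGNANT


def class_weight(n_class: int, n_total: int, n_classes: int = 2) -> float:
    """Inverse-frequency weight: (1 / n_class) * (n_total / n_classes)."""
    return n_total / (n_classes * n_class)


# 0 = benign, 1 = malignant
weights = {0: class_weight(BENIGN, TOTAL), 1: class_weight(MALIGNANT, TOTAL)}
print({label: round(w, 2) for label, w in weights.items()})


def weighted_bce(y_true: int, p_malignant: float, weights: dict) -> float:
    """Binary cross-entropy for one image, scaled by the weight of its true class."""
    eps = 1e-7
    p = min(max(p_malignant, eps), 1 - eps)  # avoid log(0)
    loss = -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))
    return weights[y_true] * loss


# The same uncertain prediction costs far more on a malignant image.
print(round(weighted_bce(0, 0.5, weights), 3))
print(round(weighted_bce(1, 0.5, weights), 3))
```

Under this assumption the benign weight comes out near 0.5 and the malignant weight near 28.9, close to the values reported in the text, so a misclassified melanoma contributes roughly 57 times more to the loss than a misclassified benign lesion.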

Training was done with tuned hyperparameters, using a batch size of 32 and fifty epochs (full passes over the training data). An optimization algorithm estimates the error of the model and adjusts the model weights accordingly, at a pace set by the learning rate.

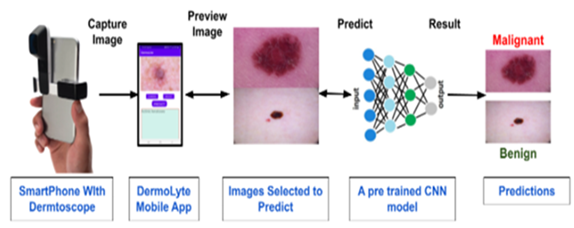

Mobile Application

The mobile application, DermLyte, was developed in Java using Android Studio. It serves as the user interface that lets the user capture an image of the lesion and view the results. The application is integrated with a dermatoscope via an adapter. As shown in the figure, it has several features: capture image, select image, image preview, and an output area. The capture image feature activates the camera and captures the image through the attached dermatoscope.

Figure 3: Architecture of Xception Model

The select image feature, in turn, lets the user choose an already captured image from the gallery. Once the user chooses or captures an image, it is displayed in the preview box. Once satisfied with the image, the user may select the predict button, and the diagnosis and accuracy are printed in the output area.

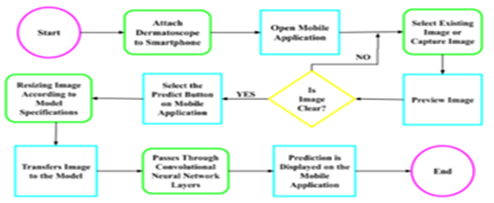

Product Procedure

To use this product, the user must have a handheld dermatoscope that can be attached to the smartphone camera. The attachment is made via an adapter. Once the dermatoscope is secured, the app is ready to capture an image of the lesion. After installing and opening the DermLyte application (shown in the figure), the user can either select an existing image from the gallery or capture a new image. Based on the selection, the user is taken to the gallery or the camera. Once an image is selected or captured, a preview is shown, and the user can retake or reselect the image if it is not clear. When satisfied with the image, the user presses the predict button, which submits the image for pre-processing, where it is resized according to the model specifications, and then passes it to the model.

Figure 4: Application Component Integration Flow.

Figure 5: Process Flow diagram

The image passes through the Convolutional Neural Network layers, and the prediction appears in the mobile application. The flow is depicted in Figures 4 and 5.
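The predict step can be summarized as a small pipeline: pre-process the image, run the model, and format the result for the output area. The sketch below is written in Python for brevity, although the application itself is written in Java. The function names, the 0.5 decision threshold, and the stand-in model are all hypothetical and serve only to show the order of operations.

```python
def format_prediction(p_malignant: float, threshold: float = 0.5) -> str:
    """Turn a malignancy probability into the text shown in the output area."""
    label = "Malignant" if p_malignant >= threshold else "Benign"
    confidence = p_malignant if label == "Malignant" else 1.0 - p_malignant
    return f"{label} ({confidence:.1%})"


def predict(image, preprocess, model) -> str:
    """Pre-process the captured image, run the model, and format the result."""
    return format_prediction(model(preprocess(image)))


# Hypothetical stand-ins for the real pre-processing step and trained network.
def fake_preprocess(image):
    return image


def fake_model(image):
    return 0.88  # pretend probability that the lesion is malignant


print(predict([[0]], fake_preprocess, fake_model))
```

Passing the pre-processing step and the model in as arguments keeps the flow independent of how either is implemented, so the same sequence applies whether the model runs on the device or elsewhere.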

References

- Skin Cancer Facts & Statistics. (2020). skincancer.org.
- WHO. (2022). Radiation: Ultraviolet (UV) radiation and skin cancer.
- Skin Cancer. (2020). WebMD.
- What is Melanoma? (2022). Aim at Melanoma Foundation.
- Melanoma: Risk Factors and Prevention. (2020). Cancer.Net.
- Brenner, M., & Hearing, V. J. (2007). The protective role of melanin against UV damage in human skin. Photochemistry and Photobiology, 84(3):539-549.
- Böhm, M., Wolff, I., Scholzen, T. E., Robinson, S. J., Healy, E., et al. (2005). α-Melanocyte-stimulating hormone protects from ultraviolet radiation-induced apoptosis and DNA damage. Journal of Biological Chemistry, 280(7):5795-5802.
- Wolf Horrell, E. M., Boulanger, M. C., & D'Orazio, J. A. (2016). Melanocortin 1 receptor: Structure, function, and regulation. Frontiers in Genetics, 7.
- Shiau, C.-J., & Tsao, M.-S. (2017). Molecular testing in lung cancer. Diagnostic Molecular Pathology, 287-303.
- Horak, V., Palanova, A., Cizkova, J., Miltrova, V., Vodicka, P., & Kupcova Skalnikova, H. (2019). Melanoma-Bearing Libechov Minipig (MeLiM): The unique swine model of hereditary metastatic melanoma. Genes, 10(11):915.
- Tests for Melanoma Skin Cancer. (2019). Cancer.org.
- Robinson, K. M. (2019). How Artificial Intelligence Helps Diagnose Skin Cancer (M. Tareen, Ed.). WebMD.
- Lewis, M. (2021). An artificial intelligence tool that can help detect melanoma. Massachusetts Institute of Technology.
- SIIM-ISIC Melanoma Classification. (2020). Kaggle.
- Melanoma. (2022). Mayo Clinic.