Review Article

Investigating the Performance of Unacceptable Results in Proficiency Testing Among Medical Laboratories

1 Raidx Medical Laboratory, Yanbu St, Dhahrat Laban Dist., Riyadh, Saudi Arabia.

2 Alzaiem Alazhari University, Faculty of Medical Laboratory Science, Clinical Biochemistry Department, Sudan.

3 Clinical Laboratories Sciences Department, College of Applied Medical Sciences, AlJouf University, Saudi Arabia.

*Corresponding Author: Abdalla Eltoum Ali, Raidx Medical Laboratory, Yanbu St, Dhahrat Laban Dist., Riyadh, Saudi Arabia.

Citation: Ali A.E., Hassan T., Elamim M., Hamza A.M. (2024). Investigating the Performance of Unacceptable Results in Proficiency Testing Among Medical Laboratories, Journal of BioMed Research and Reports, BioRes Scientia Publishers. 5(6):1-18. DOI: 10.59657/2837-4681.brs.24.118

Copyright: © 2024 Abdalla Eltoum Ali, this is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Received: December 05, 2024 | Accepted: December 18, 2024 | Published: December 26, 2024

Abstract

Proficiency testing (PT) is crucial to ensuring the quality and accuracy of medical laboratory testing. This review article investigates unacceptable PT results among medical laboratories. Unacceptable PT results indicate potential problems in laboratory performance, which can have significant implications for patient care. This review examines the factors contributing to unacceptable PT results, including pre-analytical, analytical, and post-analytical errors. It also explores the impact of unacceptable results on laboratory quality management and patient outcomes, and discusses strategies for reducing unacceptable PT results and improving overall laboratory performance. Understanding and addressing the factors that contribute to unacceptable PT results are essential for maintaining high laboratory standards and ensuring the reliability of laboratory test results.

Keywords: proficiency testing; unacceptable results; laboratory errors

Introduction

When a PT scheme is announced, laboratories commonly feel confident, even relaxed, as they prepare their systems and begin testing the samples. Some laboratories, however, struggle to meet deadlines and rush sample examination because of current workloads and time constraints. When the PT scores arrive, it can be quite a shock for confident laboratories to learn that their results were not as accurate as they thought. This can then prompt an investigation of how and why mistakes were made and which systems can be changed to correct them. PT is a learning experience for medical laboratory scientists; it is often from mistakes that a person learns the most. PT results show a laboratory what mistakes were made and in which analytes. Mistakes can range from simple clerical errors to problems with sample preparation and analyte testing [1-3]. PT samples provide a way to check the accuracy of test results without using patient samples, which would be a far riskier and less ethical practice. PT mistakes should be investigated so that changes can be made to improve performance and prevent similar mistakes in the future. PT programs can also identify the minority of laboratories that provide unreliable test results. This may be due to financial constraints and a lack of resources, or to a lack of qualified staff and training. In such cases, it may be in the laboratory's best interests to reconsider whether it should continue testing these analytes, and even to consider sending samples to a more reliable external laboratory [1,3].

A proficiency testing (PT) program is a tool used to assess the quality of laboratory test results by comparing them with a "gold standard." This is done to monitor a laboratory's ongoing capability to deliver valid results and to detect any changes in the systems being used. In clinical chemistry, a PT program uses samples that simulate patient samples, and programs can be either internal (samples are tested on-site) or external (samples are sent to another laboratory). Accurate and reliable reporting of patient test results is essential to modern healthcare. Any error made during clinical testing can lead to misdiagnosis and incorrect treatment and can jeopardize patient safety. Medical laboratory scientists therefore need to participate in PT programs to ensure that their test results are reliable and that any mistakes are identified and corrected [1,3,4].

Importance of Proficiency Testing

The importance of PT has become evident to government bodies, which now use PT participation to determine eligibility to take part in health care schemes. PT has also been endorsed by the European Union's Directive on the use of standards as a means of helping laboratories demonstrate competence, because accreditation alone does not guarantee quality. Consequently, laboratories in various countries may be at different stages of implementing PT. PT is also essential for clinical trials and other studies, since data from laboratories of variable competence can be misleading and are sometimes rejected by journals as unacceptable for publication. PT is preferable to using samples from the general population or samples that have been artificially manipulated to contain abnormal results [1-3,5].

Proficiency testing (PT) is a system used to evaluate the quality of individual laboratories' work for specific tests or measurements and is widely used in clinical laboratories. It can be considered a valuable tool for reinforcing quality assurance and for cost-effective quality improvement. Any PT program compares a laboratory's performance with that of similar laboratories, regionally or nationally, and provides an educational experience to improve that performance. PT should be used not only to monitor ongoing competence but also to identify specific areas in need of further improvement [1,3].

Poor Performance

Definitions of poor performance (PP) vary. For example, Power (1996) defines a poor performer as a laboratory that fails to produce results within defined standards. He recommended a percentage of satisfactory results (z-score between -2 and 2) of 65% and 80%; failure to achieve this was termed insufficient reliability. Blank et al. (2002) defined PP as a result significantly different from the peer-group consensus value. This definition has logical appeal and rests on the premise, in the Analytical Hierarchy, that certain tests are so vital that a wrong result is worse than no result. Other researchers have measured performance in terms of the categorical classification of results (Stableford et al., 2000) and diagnostic sensitivity (Shrader et al., 2004). Much of this research is not directly comparable because PP was studied for a single method or for disease-specific testing. PP relates to the on-site analytical process and the production of results; pre- and post-analytical influences may completely mask an error in the analytical phase [6]. This definition is useful in that it highlights that poor performance affects patient results and can be epitomized by instances where an incorrect result is worse than no result. The extent to which peer-group performance is attained, or an error in a single test can be identified, informed the PT pilot study reported in this thesis and is thus closely related to this definition. The most widely used definitions are those of ISO 13528 (2005), the international standard on statistical methods for PT, which gives a quantitative definition of test-level bias and imprecision. Good performance of a method can be indicated by attaining the target clinical decision limit or by complying with performance-based guidelines. ISO 13528, however, focuses on test-specific performance rather than on results obtained in the routine analysis of patient samples, so it may not accurately assess the influence of a method on patient results [6,7].

Significance of Investigating Poor Performance

For investigations of PT samples to be effective, it is necessary to know what evidence shows that a particular PT result is incorrect. Usually this will be implicit in how the sample has been 'solved' at the organizing laboratory: a discrepant result is one that differs from the target value by a certified amount [6]. Evidence can also be sought by relating performance on the sample, together with more general scheme data, to performance in previous or subsequent schemes, or to some related overall performance assessment of the laboratory, once it has been established what that assessment represents. Ideally, a PT sample investigation would be set up like any other analytical problem, varying the information available until the cost-effectiveness balance is right. Yet this is seldom possible because of inadequate sample information or insufficient resources for a full investigation. Nevertheless, an awareness of what evidence is available, and of the cost-effectiveness balance, is necessary to avoid squandering resources on investigations that are doomed to fail [6].

Considerable thought should be given to investigating the causes of poor performance in PT. Such investigations consume considerable time and effort and add to the cost of external quality assessment (EQA), both for the laboratories concerned and for the scheme organizers. In one sense, probing the errors identified in PT is a luxury: if resources were unlimited, it would always be better to investigate and correct the causes of errors found in patient samples. Because resources are finite, however, a pragmatic view is needed, and resources should be used where they can do the most good. PT samples are artificial, and the clinical risk to patients from an error in them is nil or minimal. Moreover, the same (or very similar) samples are often used in EQA schemes, so repeating the exercise for the same sample type to investigate and correct a PT error may not be the best use of resources. Nevertheless, we argue that it is usually worthwhile to investigate PT samples that have led to a misreport, because they reflect an error that exists, or could exist, in patient samples and could harm patients [8-10].

Factors Contributing to Poor Performance

Analytical errors have been cited as one of the most common reasons PT samples are reported incorrectly. Sub-optimal analytical performance is usually due to a failure to calibrate or standardize the method, which may reflect a lack of understanding of the importance of these processes. Often there is no immediate feedback that errors have occurred, which can give a false impression of method performance. Failure to use the calibration materials provided by the manufacturer can also lead to errors in PT sample analysis. PT samples often have analyte concentrations different from those of patient samples [8-10]. Using the laboratory's internal quality control ranges to judge the acceptability of PT results is a common cause of failure when the two do not correlate. Knowing that PT results will be scrutinized can bias the methods applied to PT samples toward obtaining a desirable result. This can occur through deliberate, conscious manipulation of PT samples or through costly but unnecessary changes to data management and reporting systems. PT samples are often treated differently from patient samples, owing to the urgency of reporting results or the time spent on additional problem-solving and consultation to resolve unacceptable results. Failure to treat PT samples as routine patient material and to simulate real-time analysis is often the cause of unsatisfactory performance [9-11]. Reviewing PT feedback and implementing corrective actions are important when errors in PT results occur, yet these processes are often implemented incorrectly or not at all. In rare cases, poor PT performance has led a laboratory to withdraw a testing service to avoid scrutiny from regulatory bodies and/or litigation from patients [9,11,12].

Analytical Errors

Analytical errors, both random and systematic, can have highly variable effects on PT/CAP survey samples. Random errors cause the data to scatter about an average value. The range, standard deviation, and coefficient of variation measure this scatter and are used to describe the precision of a method. Random errors are reflected in increased imprecision and decreased accuracy of the measurement process, as seen when a method that normally achieves satisfactory results begins to perform poorly. A random error can be caused by any event or condition affecting the sample that leads to an incorrect measurement on that sample (e.g., spuriously high potassium values due to hemolysis of a serum sample in transit because of poor packaging) [8,10]. In this case, the error arose from a failure to monitor a relevant variable. Calibration errors, by contrast, are systematic: they shift results in one direction and therefore affect accuracy (trueness) rather than precision. When a systematic error is constant across both samples of a pair, its presence can be identified by plotting the laboratory's results on a Youden plot, where it appears as results falling significantly above or below the true value for both samples. A change in the bias of a measurement can be detected by comparison with previous survey performance. This situation was observed in a clinical trial of patients presenting with septic symptoms. Despite the lack of empirical evidence, the treating teams were convinced that the CRP of a patient should be vastly elevated in typical cases. The laboratory therefore increased the CRP reference range, and the data were compared with those of the previous cohort; any significant change would ultimately be confirmed as a systematic error [10,12,13].
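The Youden-plot reasoning above can be sketched in a few lines of Python. This is a minimal illustration, assuming that each PT round supplies two samples (A and B) and that the laboratory's results for both have already been converted to z-scores; the function names and the cut-off of 2 are hypothetical choices, not values from the cited studies. A point displaced along the 45-degree diagonal (both results high or both low) suggests systematic error, whereas a point displaced across it (one high, one low) suggests random error. The first helper computes the precision measures named in the text.

```python
import math
import statistics


def precision_summary(values):
    """Return mean, sample SD and CV (%) of replicate measurements."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1)
    return mean, sd, 100.0 * sd / mean


def youden_components(z_a, z_b):
    """Split a Youden point (z-scores for samples A and B) into a component
    along the 45-degree diagonal (both results shifted the same way) and a
    component perpendicular to it (the two results disagree)."""
    along_diagonal = (z_a + z_b) / math.sqrt(2)
    across_diagonal = (z_a - z_b) / math.sqrt(2)
    return along_diagonal, across_diagonal


def classify_youden_point(z_a, z_b, limit=2.0):
    """Label a Youden point; 'limit' is a hypothetical cut-off."""
    along, across = youden_components(z_a, z_b)
    if abs(along) > limit and abs(along) >= abs(across):
        return "systematic"
    if abs(across) > limit:
        return "random"
    return "acceptable"


print(precision_summary([4.1, 4.3, 4.0, 4.2]))
print(classify_youden_point(2.6, 2.4))   # both high -> "systematic"
print(classify_youden_point(2.5, -2.3))  # opposite directions -> "random"
print(classify_youden_point(0.4, -0.3))  # near consensus -> "acceptable"
```

Working in z-score units keeps both axes on the same scale, so the diagonal and perpendicular distances can be compared directly.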

Instrument Calibration

Recommendations have been made for using PT materials to monitor instrument bias in specific assays, particularly when there is evidence of method differences across specimen types and populations. This is a complex issue, particularly when disease states are defined by specific assay results and clinical decisions are based on those results. PT materials intended for monitoring bias must be commutable with the samples likely to be encountered in routine practice, easily measurable by various methods, and representative of the clinical measurement interval of the assay [18]. The importance of instrument calibration has been amply demonstrated for clinical chemistry methods subjected to standardization procedures. In the early days of cholesterol measurement, for example, calibrating methods against reference methods produced very significant changes in the values reported for the same specimens. More recently, the change of HbA1c measurement units to the IFCC scale has caused comparable shifts in the values reported for the same specimens analyzed in different laboratories using different methods, demonstrating the importance of comparability and accuracy of results [19-21]. Although implementing such recommendations on a widespread and sustainable basis will require significant resources and depend on the availability of suitable PT materials, this neglected area offers potential for improving the accuracy of results for some specific analytes. Failure of a laboratory to achieve acceptable performance may well indicate a need to optimize the calibration of a method rather than any shortcoming in the day-to-day handling of samples and assays. In summary, instrument calibration and monitoring for bias are key factors affecting performance in a wide range of assays, and laboratories should consider using PT-based approaches to assess their current practice in this area. PT organizers must work with clinical scientists and regulatory bodies to provide appropriate support and guidance [14-17].
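To illustrate how a change of units shifts reported values, the sketch below converts HbA1c between NGSP/DCCT units (%) and IFCC units (mmol/mol). It assumes the widely used IFCC-NGSP master equation, NGSP = 0.09148 × IFCC + 2.152; this equation is not taken from the article, and the function names are illustrative.

```python
def ngsp_to_ifcc(ngsp_percent):
    """Convert HbA1c from NGSP/DCCT units (%) to IFCC units (mmol/mol),
    assuming the master equation NGSP = 0.09148 * IFCC + 2.152."""
    return (ngsp_percent - 2.152) / 0.09148


def ifcc_to_ngsp(ifcc_mmol_mol):
    """Inverse conversion: IFCC units (mmol/mol) to NGSP units (%)."""
    return 0.09148 * ifcc_mmol_mol + 2.152


for value in (5.0, 6.5, 7.0, 8.0):
    print(f"{value:.1f} % -> {round(ngsp_to_ifcc(value))} mmol/mol")
```

With this equation, 6.5% corresponds to about 48 mmol/mol and 7.0% to about 53 mmol/mol, so a laboratory applying the wrong scale would report values far from those of its peers.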

For results to be accurate, laboratory instruments must be precise. Specially designed PT materials for assessing instrument bias are not common in current PT schemes. Moreover, although precision is a fundamental feature of all laboratory procedures and indicates a method's capability, proficiency testing measures the total effect of both imprecision and bias [22]. Reagents used in the clinical chemistry laboratory must also be of high quality. Because PT materials are often assigned values by a method that includes comparison with a reference method (e.g., HPLC, GC-MS, ICP-MS, IDMS) or a higher-order procedure (e.g., atomic absorption, titrimetry), any bias in the value assigned to the PT material will affect the evaluation of the laboratory's result. One source of error or bias that can lead to inaccurate value assignment for PT materials is the use of inappropriate or deteriorated reagents. Depending on the state of the art of the assay, this error may occur whether or not the laboratory result is traceable to the PT material, and it becomes more important when target values for commutable materials are assigned by peer groups [23].
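Because PT reflects the combined effect of imprecision and bias, a common way of summarizing both is an estimate of total analytical error. The sketch below assumes the frequently used form TE = |bias| + k × CV with k = 1.65 (a one-sided 95% multiplier); the allowable limit and the example figures are hypothetical.

```python
def total_error(bias_percent, cv_percent, k=1.65):
    """Estimate total analytical error (%) as |bias| + k * CV.

    k = 1.65 is a common one-sided 95% multiplier; other conventions
    (e.g. k = 2) are also used, so treat it as an assumption."""
    return abs(bias_percent) + k * cv_percent


def meets_allowable_error(bias_percent, cv_percent, tea_percent, k=1.65):
    """True if the estimated total error is within the allowable limit."""
    return total_error(bias_percent, cv_percent, k) <= tea_percent


# Hypothetical method: 3% bias, 2% CV, judged against a 10% allowable error
print(f"TE = {total_error(3.0, 2.0):.2f}%")       # TE = 6.30%
print(meets_allowable_error(3.0, 2.0, 10.0))      # True
print(meets_allowable_error(-6.0, 3.0, 10.0))     # False (6 + 4.95 > 10)
```

A method with good precision can still fail this check if its bias is large, which is why PT can reveal problems that IQC precision data alone may not.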

Sample Handling and Storage

Sample mishandling and improper storage were often cited as problems in the pre- and post-analytical phases when incorrect results were reported, and sample handling and storage have been identified as problems relating to poor PT performance [24-26]. In one instance, water contamination of a sample was traced to a hole in the laboratory's roof [24]. Unfortunately, the laboratory staff were unaware of the problem, or of the extent of the water damage to the sample, until the EQA provider informed them of the outlier result [24,25].

Operator Competence

The effect of poor operator technique and lack of experience on PT performance has been studied by collecting information from participating laboratories. Evidence from the programs of Directorate General XII of the European Communities shows that a high SD between the results submitted by participants and the consensus mean for a plasma pool, generally regarded as outlier performance, is associated with the employment of untrained staff in the analysis of CPD specimens [26,27]. The main problems attributable to insufficient staff training and experience include failure to remove high-molecular-weight substances from ultrafiltration membranes before re-equilibration, interchanging serum and urine specimens, not following the correct analytical procedure (e.g., incorrect reagent dilution), and inability to judge whether a result is likely to be incorrect because of analytical error. Similar problems arose in the Scandinavian survey, where poor performance was significantly associated with staff having less than 2 years of experience in 6 of 13 subspecialties of medical biochemistry [15,28-31].

Mistakes by entry-level personnel, which can drastically affect test results, are more likely when staffing problems arise. In the USA, a survey by Mallows et al. found that financial constraints imposed by managed care had forced many laboratories to lay off qualified personnel and fill vacancies with technical and clerical staff with little or no laboratory experience. The evidence suggests that this shift in staffing may have led to increased errors and adverse events, to the detriment of patient safety. This worrying trend has been recognized internationally. In response, the UK Council for Professions Supplementary to Medicine set out a policy for the training and development of HCS staff, which should be closely followed if patient safety is not to be compromised. The influence of staffing on laboratory error is of further concern for CPD analysis, as some laboratories rely on a general clinical chemist who may leave the post through retirement or career advancement and be replaced by a specialist in a different area of medical biochemistry. Errors may decrease if medical biochemists who perform poorly in subspecialties outside their training are reassigned to tests within their own field [32,33].

Pre-analytical Errors

The most common pre-analytical errors that cause poor PT performance are misidentification of patient samples, deteriorated or contaminated samples, inappropriate sample collection, unsuitable test requests, and incorrect test selection. Sample mix-ups are common in clinical laboratories and may go undetected unless an unexpected PT result is obtained. They can occur within or outside the laboratory, for example when samples are left in a location where other patients' samples are also present, such as a ward or a doctor's surgery. When samples are mixed up, the reported result does not belong to the true patient, with potentially harmful downstream effects for the patient whose sample was analyzed incorrectly. In some cases, this type of error can be detected if samples are labeled in a way that allows their identity to be verified electronically and tracked through any automated analyzer [34-36].

Special attention should be given to distinguishing pre-analytical errors, which occur before analysis, from the other error types: analytical errors occur during testing, and post-analytical errors occur after testing. When investigating poor PT performance, it may only be possible to determine that an error is likely to have occurred; it is not always possible to diagnose the exact problem definitively. Several systematic techniques can help identify the nature of specific errors. These include reviewing the entire testing process for anomalies, parallel testing, blind split testing, and standard addition of known amounts of analyte at different concentrations to patient samples. These methods involve re-testing patient samples while manipulating test conditions, then comparing the results to determine where a problem may have occurred [6,34,35,37].
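Standard addition, one of the techniques listed above, can be sketched as a least-squares fit of the measured response against the amount of analyte added to aliquots of the same sample. This minimal sketch assumes a linear response reported in concentration units; the function names and example numbers are hypothetical. The magnitude of the x-intercept (intercept divided by slope) estimates the original concentration, and a slope or recovery well away from 1 (100%) points to a proportional, matrix-related error.

```python
def standard_addition(added, measured):
    """Ordinary least-squares fit of measured response vs. amount added.

    Returns (slope, intercept, estimated_original); the original
    concentration is the magnitude of the x-intercept, intercept / slope."""
    n = len(added)
    mean_x = sum(added) / n
    mean_y = sum(measured) / n
    sxx = sum((x - mean_x) ** 2 for x in added)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(added, measured))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept, intercept / slope


def recovery_percent(base, spiked, added):
    """Percentage of the added analyte recovered by the method."""
    return 100.0 * (spiked - base) / added


# Hypothetical spikes of 0, 2, 4 and 6 units into one patient sample
slope, intercept, original = standard_addition([0, 2, 4, 6], [5.0, 6.8, 8.6, 10.4])
print(f"slope={slope:.2f} intercept={intercept:.2f} original={original:.2f}")
print(f"recovery of first spike: {recovery_percent(5.0, 6.8, 2.0):.1f}%")
```

In this made-up example only about 90% of each spike is recovered, the kind of finding that would steer an investigation toward a matrix or calibration problem rather than a sample mix-up.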

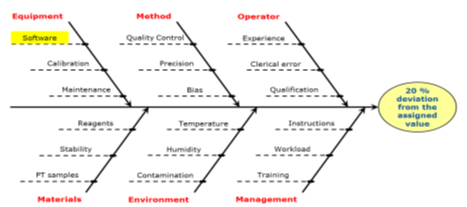

Post-analytical Errors

The Dempster review reported that deviations in preceding qualitative elements could lead to post-analytical errors that contribute to diagnostic errors. One of the main functions of the clinical biochemistry laboratory is to provide accurate and reliable data. This is achieved by using biological variation data and other available evidence to set analytical quality specifications, which are then used to measure the performance of an analyte or measurement procedure. Performance can be assessed during internal quality control, when the laboratory measures the precision of its data and uses guidance derived from biological variation data to decide whether the precision for an analyte is acceptable, with limits set to designate a desirable or minimum standard [37,38]. Although imprecision is the main focus when biological variation is used to assess analytical performance, accuracy is also assessed through bias, and this is a key area in which post-analytical errors can be identified. External quality assessment is used in the UK and other countries worldwide to assess and improve a laboratory's performance in the analytical phase by having a second party assess the quality of the test results produced by the first party. It is largely based on proficiency testing, in which a national scheme provider sends simulated patient samples to the laboratory and the laboratory's performance is then compared with that of its peers [34,36-38]. When a laboratory investigates poor performance for an analyte in a proficiency test, one way to differentiate between analytical and post-analytical errors is to compare the analyte's performance with biological variation data or a set of predefined analytical quality specifications. This may reveal that an acceptable level of precision and trueness has been achieved, indicating that the poor performance stems from an error in interpreting the clinical information given, or it may show that the analyte has deviated from the quality specifications, indicating an analytical error [34,39,40]. Other ways to distinguish the two error types include reviewing the analytical phase and how the data were obtained; such investigations often use a fishbone diagram to map the various influences on the production of analyte data, as in a study by Carlos Rios. Understanding the error type is crucial, because a post-analytical error may have serious implications for the patient if a clinical decision is based on inaccurate or misinterpreted data; it is therefore necessary to act on the error and attempt to improve the performance of the analyte. This is reflected in the recent decision by the Australian government and the Institute of Clinical Pathology and Medical Research to underpin the entire pathology industry in Australia with a quality framework based on risk management, and in the recent publication of an ISO standard for medical laboratories built on the same principle [34,39,41].
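The comparison with biological variation described above can be sketched as follows. The multipliers are the widely cited optimum, desirable, and minimum specifications derived from within-subject (CVI) and between-subject (CVG) biological variation; they are not taken from this article. The analyte values in the example are hypothetical, and the rule that a method within its specifications points toward a post-analytical (interpretive) cause simply encodes the reasoning in the text rather than a validated decision rule.

```python
import math

# (fraction of CVI allowed as imprecision, fraction of combined CV allowed as bias)
LEVELS = {
    "optimum": (0.25, 0.125),
    "desirable": (0.50, 0.250),
    "minimum": (0.75, 0.375),
}


def quality_specs(cv_i, cv_g, level="desirable"):
    """Return (max CV %, max bias %, allowable total error %) from
    within-subject (cv_i) and between-subject (cv_g) biological variation."""
    f_cv, f_bias = LEVELS[level]
    max_cv = f_cv * cv_i
    max_bias = f_bias * math.sqrt(cv_i ** 2 + cv_g ** 2)
    tea = 1.65 * max_cv + max_bias
    return max_cv, max_bias, tea


def meets_specs(observed_cv, observed_bias, cv_i, cv_g, level="desirable"):
    """True if both observed imprecision and |bias| are within the specs."""
    max_cv, max_bias, _ = quality_specs(cv_i, cv_g, level)
    return observed_cv <= max_cv and abs(observed_bias) <= max_bias


def suggested_focus(observed_cv, observed_bias, cv_i, cv_g, level="desirable"):
    """A method within its specifications points the investigation toward
    post-analytical (interpretive) causes; otherwise toward analytical error."""
    if meets_specs(observed_cv, observed_bias, cv_i, cv_g, level):
        return "review post-analytical steps"
    return "investigate analytical error"


# Hypothetical analyte with CVI = 5.0% and CVG = 10.0%
print(quality_specs(5.0, 10.0))
print(suggested_focus(2.0, 1.5, 5.0, 10.0))  # within desirable specs
print(suggested_focus(2.0, 4.0, 5.0, 10.0))  # bias exceeds the desirable limit
```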


Staff Competency and Training

Staff inexperience and lack of knowledge are significant causes of incorrect method selection or inappropriate test performance, resulting in calibration, control, and patient sample results that differ from the true value. One way to combat this is through appropriate education and training. Staff should regularly attend appropriate training courses and be encouraged by laboratory management to pursue further education in clinical chemistry. Continuing education will improve staff competency, and proficiency testing itself serves as a form of continuing education and competency improvement. Regular participation in an external PT program forces staff to identify and resolve issues in their analytical system, so PT samples can serve as "practice" when testing new methods or troubleshooting existing ones. PT also helps determine whether a new staff member can perform testing independently [34,42,43]. If a laboratory has been notified of unacceptable PT performance, corrective action should be taken and staff competency reassessed. Performance criteria and limits must be established to determine whether a result is acceptable. This is often done by comparing the laboratory result with a known true value, which is effectively PT performed on a patient sample. If performance remains unsatisfactory, the testing concerned should be suspended until further training or method verification can be performed. In some cases, it may be necessary to replace staff [34,40,43]. Overall, the most important factors in staff competency and training are the attitude, common goals, and response to errors within the laboratory. This requires full cooperation between laboratory management, staff, and pathologists. Errors in analysis or PT must be met with an emphasis on error identification and resolution, not punishment.
Laboratory management should determine the cause of the error and what action can prevent a recurrence, and then drive the necessary changes. Contacting the PT provider can also help, as the provider is a resource for determining the correct result and the most appropriate method for obtaining it [34,39,42].

Methods of Identifying Poor Performance

Internal quality control (IQC) can be an effective tool for identifying poor laboratory performance between PT exercises. It can be more effective than comparing results directly with the assigned value because it is more sensitive to problems. IQC is defined as the set of procedures used to monitor the analytical process once it has been established that the process is adequate to maintain the quality of results. IQC therefore provides ongoing monitoring between PT events and can be as effective in identifying problems with patient results as in explaining PT results. IQC methods can include setting control limits for the measurand, producing a control chart to monitor changes in accuracy and precision, or comparing an internal control material against another measurement method to validate the method while monitoring its long-term bias and imprecision [34,41,43]. The International Organization for Standardization (ISO) recommends statistical analysis of PT results to identify poor laboratory performance. It is a simple and objective method for identifying outliers and trends in PT results, with the advantage that the laboratory's performance is compared with an objective target, i.e., the assigned value, Δ. Various statistical methods have been used to identify outlying PT results, such as modified z-scores and the Levey-Jennings quality control procedure. Z-scores are the most commonly used; very few laboratories use other tests, although these can be just as effective, if not more so. There are many ways of calculating z-scores, many of which are inappropriate for PT results.
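The control-chart monitoring mentioned above is commonly implemented with Levey-Jennings charts and Westgard rules. The sketch below checks three such rules (1-3s, 2-2s, and a simple consecutive form of R-4s) against an established control mean and SD. The choice of rules and the example data are assumptions for illustration; real IQC designs usually combine several rules across control levels and runs.

```python
def westgard_flags(results, mean, sd):
    """Return the Westgard rules violated by a sequence of control results.

    Rules checked (a simplified subset):
      1-3s: a single result more than 3 SD from the mean
      2-2s: two consecutive results more than 2 SD on the same side
      R-4s: two consecutive results, one above +2 SD and one below -2 SD
    """
    z = [(x - mean) / sd for x in results]
    flags = set()
    for i, zi in enumerate(z):
        if abs(zi) > 3:
            flags.add("1-3s")
        if i == 0:
            continue
        prev = z[i - 1]
        if (prev > 2 and zi > 2) or (prev < -2 and zi < -2):
            flags.add("2-2s")
        if (prev > 2 and zi < -2) or (prev < -2 and zi > 2):
            flags.add("R-4s")
    return sorted(flags)


# Control material with an established mean of 100 and SD of 2
print(westgard_flags([101, 99, 105, 105, 98], 100, 2))  # ['2-2s']
print(westgard_flags([100, 107], 100, 2))               # ['1-3s']
print(westgard_flags([104.5, 95.5], 100, 2))            # ['R-4s']
```

A 2-2s violation tends to indicate a systematic shift, whereas R-4s points to increased random error, which mirrors the error classification discussed earlier.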

Statistical Analysis of Proficiency Testing Results [8,10,40,44]

Error-type analysis as a strategy for identifying poor performance is still in development. Error can be defined as a deviation from the true value caused by bias, imprecision, or their combination (total analytical error), which can cause a result that indicates a significant clinical change to be interpreted as showing no clinical change. This usually leads to a failed or wrong decision when interpreting the patient's clinical data or making a medical decision, although the error itself may remain unseen. The step for identifying the error type is to compare the laboratory's results with those of the PT provider and of the peer group. This analysis requires extensive information from the PT provider and the peer groups, but by comparing these results, patterns of error types can be identified much more readily [45].
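Comparing a laboratory's results with the peer-group mean over several PT events can be sketched as below. The sketch assumes, hypothetically, that a persistent mean percentage deviation indicates systematic error and that a large spread of deviations indicates random error; the 5% limits are placeholders, not criteria from the cited work, and would in practice come from the scheme's specifications.

```python
import statistics


def percent_deviation(lab, peer_mean):
    """Deviation of the laboratory result from the peer-group mean, in %."""
    return 100.0 * (lab - peer_mean) / peer_mean


def error_pattern(lab_results, peer_means, bias_limit=5.0, spread_limit=5.0):
    """Summarise deviations over several PT events.

    The mean deviation estimates a persistent (systematic) component and
    the SD of the deviations estimates the scatter (random component).
    Both limits are hypothetical placeholders."""
    devs = [percent_deviation(x, m) for x, m in zip(lab_results, peer_means)]
    bias = statistics.mean(devs)
    spread = statistics.stdev(devs)  # needs at least two events
    labels = []
    if abs(bias) > bias_limit:
        labels.append("systematic")
    if spread > spread_limit:
        labels.append("random")
    return bias, spread, labels or ["within limits"]


# Hypothetical laboratory that reads consistently high across four events
print(error_pattern([5.6, 10.7, 15.9, 8.4], [5.0, 10.0, 15.0, 8.0]))
```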

This is the simplest, clearest, and most efficient way of identifying poor performance relative to peer groups. It involves extracting statistical information from the PT results, such as the z-score, the rate of correct results, and the error type for every analyte. The z-score is usually calculated as Z = (x - X) / s, where x is the laboratory result, X is the mean result, and s is the standard deviation; it expresses how many standard deviations separate the laboratory result from the mean. The rate of correct results is expressed as a percentage and requires a predefined criterion for deciding whether each result is acceptable: it is the number of PT results classified as correct divided by the number of PT events [46-48].
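The z-score and rate-of-correct calculations described above can be sketched as follows. The z-score follows the formula in the text; the classification bands (|z| ≤ 2 satisfactory, 2 < |z| < 3 questionable, |z| ≥ 3 unsatisfactory) follow the common ISO 13528 convention and are an assumption here, since the text does not specify them. The example values are hypothetical.

```python
def z_score(x, assigned, sd):
    """z = (x - X) / s, as defined in the text."""
    return (x - assigned) / sd


def classify(z):
    """Common interpretation bands (e.g. ISO 13528)."""
    if abs(z) <= 2:
        return "satisfactory"
    if abs(z) < 3:
        return "questionable"
    return "unsatisfactory"


def rate_of_correct(z_scores, limit=2.0):
    """Percentage of PT results judged correct (|z| <= limit)."""
    correct = sum(1 for z in z_scores if abs(z) <= limit)
    return 100.0 * correct / len(z_scores)


# Hypothetical results for one analyte; X = 5.5, s = 0.2
zs = [z_score(x, 5.5, 0.2) for x in [5.6, 6.0, 5.2, 6.3]]
print([classify(z) for z in zs])
print(f"rate of correct: {rate_of_correct(zs):.0f}%")
```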

External Quality Assessment Programs

The participant's PT result, as shown in the graphic and table, does not fully reflect the real condition of the test. However, together with the previous information, it can provide suggestions and feedback so that the next PT can be prepared for better. Further statistical processing can produce a table comparing the participant's glucose results with those of other participants, and a histogram comparing the two. This graphical and tabular information makes the participant's test quality easy to assess [49,50].

From these data, a Clarke error grid can be constructed together with the regression line of the participant's results against the standard criteria. The picture below shows the participant's glucose results compared with the standard criteria and the obtained regression line. Information about bias (systematic error) is obtained from the intercept of the regression line, which is -73.105. Expressed as an LTC value, the glucose test has BTC, since the regression line lies close to the line of the standard criteria. The correlation coefficient (r = 0.913) and the standard deviation of the regression line (7.402) are then calculated. These two values are used to calculate %TE from the formula 125%^√(1+r)(1-s), where s is the standard deviation of the n LTC values $x_i$:

$$s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \frac{1}{n}\sum_{j=1}^{n}x_j\right)^2}$$

After obtaining the current TE and the slope of the previous standard criteria vs LTC regression line, a plot of %TE uncertainty vs BTC can be drawn: it has an ellipse shape defined by the slope line ± TE/(s√(1-r²)) and shows the location of the glucose test point. Finally, a %bias and %TE report of the glucose test is produced, complete with a histogram and the findings from the beginning of the analysis [49,51-52].
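The regression quantities mentioned above (intercept, slope, correlation coefficient r, and the standard deviation about the regression line) can be computed with ordinary least squares, as in the Python sketch below. It assumes the standard-criteria (target) values are the x-variable and the participant's results the y-variable, and it uses n - 2 degrees of freedom for the standard deviation about the line (S_y|x); the %TE formula quoted above is not implemented here.

```python
from math import sqrt


def regression_summary(target, participant):
    """Ordinary least-squares fit of participant results (y) on standard-criteria values (x).

    Returns slope, intercept, Pearson r, and S_y|x (standard deviation about the line).
    Requires at least three pairs and non-constant x and y values.
    """
    n = len(target)
    mx = sum(target) / n
    my = sum(participant) / n
    sxx = sum((x - mx) ** 2 for x in target)
    syy = sum((y - my) ** 2 for y in participant)
    sxy = sum((x - mx) * (y - my) for x, y in zip(target, participant))

    slope = sxy / sxx              # departure from 1 suggests proportional systematic error
    intercept = my - slope * mx    # departure from 0 suggests constant systematic error
    r = sxy / sqrt(sxx * syy)      # Pearson correlation coefficient
    residual_ss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(target, participant))
    s_yx = sqrt(residual_ss / (n - 2))
    return slope, intercept, r, s_yx


def sample_sd(values):
    """Sample standard deviation with n - 1 in the denominator."""
    n = len(values)
    m = sum(values) / n
    return sqrt(sum((v - m) ** 2 for v in values) / (n - 1))


# Example: participant results exactly 2x + 1 of the targets (perfect correlation)
print(regression_summary([1, 2, 3, 4], [3, 5, 7, 9]))
```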

To support quality improvement, each participant's performance should be identified and, where appropriate, immediate suggestions given for its program. As described previously in the linearity analysis, the total error estimation module can suggest PT performance based on the standard criteria values. In the following module, several graphs of participants taken from the 2005 PT results of participants with code CMS 331 are displayed. MS Excel, combined with the 2005 Excel add-in program from [Link], was used to process the data and simplify the statistical calculation for each of the participant's tests. The Excel data table, filled with the coded standard criteria, LTC, and %bias values of the participant and processed with error grid conversion, can be displayed as an xy scatter plot for each test. The glucose test is taken as an example [54-57].
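The %bias values plotted against the standard criteria in such an xy scatter plot can also be computed outside Excel. The sketch below is a hypothetical Python equivalent of that table (it does not reproduce the add-in mentioned above): each test maps to a pair of (participant result, standard-criteria value), and the output gives the x-y points for the plot. The test names and values are illustrative.

```python
def percent_bias(result, target):
    """%bias = 100 * (result - target) / target."""
    return 100.0 * (result - target) / target


def bias_points(tests):
    """Hypothetical helper: map test name -> (target, %bias) points for an xy scatter plot.

    tests maps a test name to a (participant result, standard-criteria value) pair.
    """
    return {name: (target, round(percent_bias(result, target), 2))
            for name, (result, target) in tests.items()}


# Illustrative values only (mmol/L)
print(bias_points({"glucose": (5.5, 5.0), "urea": (6.3, 6.0)}))
```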

Internal Quality Control Measures

Quality control is the part of quality management focused on fulfilling quality requirements. To guarantee the reliability of laboratory results, those results must be precise (free from random error) and accurate (free from both systematic and random error). Precise and accurate results depend on properly functioning reagents and instruments and on technicians who follow correct procedures; errors can therefore arise from faulty procedures, technician inexperience, or malfunctioning equipment [59-61]. To find and correct errors as they occur, the clinical chemist needs reliable and rapid methods to assess the precision and accuracy of the testing process. Control materials are used to monitor precision and accuracy on a day-to-day basis. Precision is assessed using control charts: for normally distributed control results, about 1 in 20 results (roughly 5%) is expected to fall outside the range ±2s, and about 3 in 1,000 outside the range ±3s (where s is the standard deviation of the control results). A large set of control results should therefore form a normal distribution. Multiple control materials at different concentration levels should be tested. Accuracy is assessed by comparing the laboratory's results with the true (target) value of the control material. If the control material is human blood or urine, it may be enriched with known amounts of analyte, so that assaying it alongside patient samples can double as an accuracy assessment [62-66]. Any detected imprecision or inaccuracy must be dealt with promptly: reagents, equipment, and technician methods must be evaluated and corrected, and if errors persist, new reference ranges may need to be established. Control material analysis is a form of internal quality assessment; to ensure that the overall quality of testing is acceptable, the clinical chemist should also participate in external quality assessment programs [65,67,68].
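The control-chart expectations can be checked numerically. The Python sketch below derives ±2s and ±3s limits from a set of baseline control results, applies a simple warning/rejection check (the 1-2s and 1-3s rules of Westgard-style quality control, which this section does not name explicitly), and uses the normal distribution to show the expected fraction of results beyond each limit. The function names and baseline data are illustrative.

```python
from statistics import NormalDist, mean, stdev


def control_limits(baseline):
    """Levey-Jennings style limits from a baseline set of control results."""
    m, s = mean(baseline), stdev(baseline)
    return {"mean": m, "sd": s,
            "2s": (m - 2 * s, m + 2 * s),
            "3s": (m - 3 * s, m + 3 * s)}


def check_control(value, limits):
    """Simple 1-2s warning / 1-3s rejection check for a single control result."""
    lo3, hi3 = limits["3s"]
    lo2, hi2 = limits["2s"]
    if value < lo3 or value > hi3:
        return "reject (1-3s)"
    if value < lo2 or value > hi2:
        return "warning (1-2s)"
    return "in control"


def expected_fraction_outside(k):
    """Two-sided fraction of a normal distribution lying beyond +/- k SD."""
    return 2 * (1 - NormalDist().cdf(k))


print(round(expected_fraction_outside(2), 4))  # roughly 1 in 20
print(round(expected_fraction_outside(3), 4))  # roughly 3 in 1,000
```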

Review of Control Charts

The first step in any investigation is to identify the issue, for example by detecting abnormal patterns or changes in test performance. The laboratory performs a wide variety of tests, and for efficiency and speed, data from multiple analytes may be examined together; for the EAP, however, each analyte should be evaluated individually. Established control charts are the most effective method for identifying significant changes in test performance. Nelson's rules have been applied in numerous benchmarked EQUAS schemes, such as the Ricos trueness verification protocol, which considers bias and total allowable error to measure systematic error. An online tool called the quality and error control expert (QUALEX) has been developed specifically to identify analytical errors in EQUAS data. QUALEX offers an advantage over other Shewhart chart rule systems because it allows rules to be added or modified easily to investigate specific errors derived from EQUAS results [69-72].
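To illustrate how rule-based review of a control chart works, the sketch below implements three of Nelson's eight rules: one point beyond 3 SD, nine points in a row on the same side of the mean, and six points in a row steadily increasing or decreasing. It is a simplified, hypothetical example; it is not QUALEX and does not reproduce the Ricos protocol.

```python
def nelson_violations(values, center, sd):
    """Return (rule number, index) pairs for Nelson rules 1-3 on a series of control results.

    Rules 2 and 3 are reported once per run, at the point where the run first reaches
    the required length.
    """
    hits = []

    # Rule 1: one point more than 3 SD from the mean
    for i, v in enumerate(values):
        if abs(v - center) > 3 * sd:
            hits.append((1, i))

    # Rule 2: nine points in a row on the same side of the mean
    run_side, run_len = 0, 0
    for i, v in enumerate(values):
        side = (v > center) - (v < center)  # +1 above, -1 below, 0 exactly on the mean
        if side != 0 and side == run_side:
            run_len += 1
        else:
            run_side, run_len = side, (1 if side != 0 else 0)
        if run_len == 9:
            hits.append((2, i))

    # Rule 3: six points in a row steadily increasing or decreasing
    trend_dir, trend_len = 0, 1
    for i in range(1, len(values)):
        step = (values[i] > values[i - 1]) - (values[i] < values[i - 1])
        if step != 0 and step == trend_dir:
            trend_len += 1
        else:
            trend_dir, trend_len = step, (2 if step != 0 else 1)
        if trend_len == 6:
            hits.append((3, i))

    return sorted(hits, key=lambda h: (h[1], h[0]))


# Example: nine consecutive control results just above the mean trigger rule 2
print(nelson_violations([101] * 9, center=100, sd=2))
```

Each rule is written as a separate pass so that further rules can be added in the same way, which is the flexibility the paragraph above attributes to configurable rule systems.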

This section sets out the guidelines the laboratory should follow whenever EQUAS-like data are analyzed. The EQUAS scheme aims to monitor the results of routine clinical laboratory testing. Consequently, to truly reflect routine performance, all available data and control results should be included in the analysis. This inclusiveness is a fundamental difference between an evaluation and an investigation: the more relevant information is left out, the more the investigation becomes an evaluation of the failure itself. Any investigation must find problems that can be corrected rather than merely identify failure. The findings should also be of value to other laboratories experiencing similar problems, even if the specifics differ. The quality coordinator conducts all data analysis and may delegate specific tasks to other staff with suitable expertise [73-75].

External Quality Assessment Programs

Probability-based models and multilevel regression are used to evaluate EQA performance and the potential effects of covariates. This newer evaluation method avoids additional cost and time and can help assess systematic and random errors in data generated from PT events. This more recent approach to post-analytical assessment of PT data has made it possible to understand more fully the errors that occur during PT events and to assess the impact and outcome of corrective action [16,76-79]. Adverse analytical events (AAE) have been defined as medical laboratory errors detected before, during, or after laboratory information is used to make decisions about patient care. This led to the development of a new method in which PT samples do not require formal testing to obtain results; instead, patient samples or retrospective data obtained with the same tests, methods, and reagents in routine use are examined. This allows data collected on AAE to be compared and analyzed alongside PT event data [80-82]. The two most commonly applied methods of external quality assessment in PT programs are the post-analytical approach and data evaluation. The post-analytical approach involves re-testing PT materials under routine diagnostic laboratory conditions and comparing these results with those obtained during the PT event. Any discrepancies can then be investigated and corrective action taken. This approach is effective at determining the cause of error but is expensive and time-consuming [83-86].
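The comparison step of the post-analytical approach (re-testing PT materials under routine conditions and checking them against the PT-event results) can be sketched as below. The function name and the allowable difference are hypothetical, laboratory-chosen values; real schemes define their own acceptance criteria.

```python
def compare_retest(pt_event_results, retest_results, allowable_pct):
    """Flag PT items whose routine re-test differs from the PT-event result by more than allowable_pct.

    Both arguments map a PT item identifier to a numeric result.
    Returns (item, percentage difference) pairs, rounded to one decimal place.
    """
    flagged = []
    for item, original in pt_event_results.items():
        diff_pct = 100.0 * (retest_results[item] - original) / original
        if abs(diff_pct) > allowable_pct:
            flagged.append((item, round(diff_pct, 1)))
    return flagged


# Illustrative data: item B differs by 15% on re-testing and would be investigated
print(compare_retest({"A": 100.0, "B": 200.0}, {"A": 103.0, "B": 230.0}, allowable_pct=10))
```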

Peer Review and Inter-laboratory Comparisons

This method of identifying substandard performance is probably one of the best but, regrettably, it is rarely used. The idea is that data on the methods and results of a particular analysis are shared between two or more laboratories and then critically evaluated. In doing so, laboratories can identify and correct local problems in their analytical process. The main criticism of this method is that it has proved too costly for many laboratories and has a poor turnaround time. Since PT has become increasingly easy and inexpensive to access, it may be feasible to make such comparisons a mandatory part of PT programs. The Swiss Vitamin C PT program is an example of how successful an inter-laboratory comparison can be as a tool for improvement: a matrix of four different lyophilized sample types was distributed to the participating laboratories, and evaluation of the results showed a significant improvement in accuracy relative to the consensus mean [87,88,89]. As these examples show, there are several ways in which laboratory performance can be assessed and improved. PT results are a valuable data source that allows tailored guidance to be given to laboratories, as weaknesses in the analytical process are often difficult to see. The main problem is linking poor performance to its cause and rectifying it; in many cases the obstacle is a lack of motivation, because failure has few consequences. If laboratories are unable to apply measurable suggestions and see improvement, there is little chance that their performance will change [90].

Strategies for Addressing Poor Performance in Laboratory Testing

A strategy that is often overlooked is the progression from addressing poor performance to continuous improvement. Most case studies focus on returning to an acceptable level of performance, usually because comparing the effectiveness of different strategies is perceived to offer little benefit relative to the cost of implementation. One case study that demonstrates the opposite approach investigated poor performance in the pilot scheme of a new EQA program for cystic fibrosis genetic analysis. The new method was to replace the laboratory's existing method, and it was decided that investigating and addressing the poor performance would bring greater long-term benefit, because it affected the laboratory's ability to offer reliable testing for cystic fibrosis patients. The aim was to identify the cause of failure and to compare different strategies for successfully implementing the new method [91,92]. When poor performance recurs in subsequent PT schemes, an important consideration for an institution is the allocation of resources to investigate and address it. This may involve suspending routine work to free staff and time to investigate the cause and complete the investigation so that changes can be implemented. This may have cost implications if PT performance is linked to financial incentives or reimbursement rates, and a loss of revenue can trigger a sequence of events that eventually costs more than the revenue originally lost. Such an approach was taken by the Chemistry Laboratory at Emory Healthcare and can be related to its decision to withdraw from accreditation and reapply later. Changes implemented as a result of the investigation must effectively ensure that errors are not repeated, which may require a trial-and-error approach to find the most appropriate corrective action. An example given in the case studies is the change from reagent rental to purchase to improve the calibration and maintenance of equipment [93,94].

Readers should therefore understand why laboratories show poor performance in PT, what procedures are involved, and who is responsible for outlining strategies to address poor performance. They should also understand how some of the case studies presented in other sections could have been approached differently [94,95].

Root Cause Analysis

An RCA model looks for the underlying reasons, or "root causes," of a problem; it therefore goes beyond identifying the immediate symptoms of performance, quality, or safety issues. The root cause is the fundamental cause of an issue that has arisen or of a deviation or non-conformance that has been found. A deviation may have one or more underlying causes. To improve systems or processes, one has to understand the underlying cause: if the root cause is not found and fixed, the issue is likely to recur or even affect other parts of the system or process. The goal of traditional linear root cause analysis (RCA) models has been to identify one or a few fundamental causes [94,95]. How in-depth the investigation becomes depends on the significance of the analysis, the frequency of unsatisfactory results, and the presence of trends. The laboratory should first check whether the PT report explains the poor performance. In the absence of such an explanation, a stepwise approach is recommended to increase the likelihood of identifying the problem's root cause [96,97]. The staff who performed the analysis and, if applicable, the laboratory management should be involved in the following steps of the inquiry: analyze the raw data, internal quality control data, any patterns from earlier PT rounds, and the participants' overall performance in that round; after the inquiry is finished, create a plan for corrective measures, taking into account any implications for earlier test results; carry out and document the corrective action or actions; and verify the efficacy of the corrective action or actions [95,96].

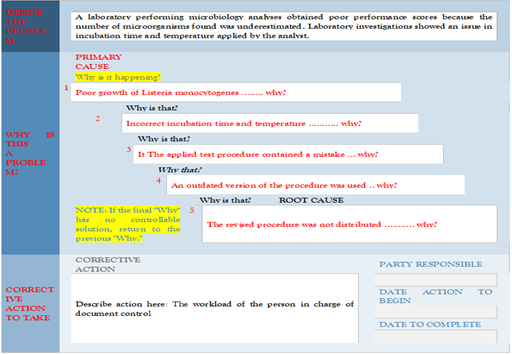

Problems related to the PT scheme: poor performance could also be due to the PT scheme not being entirely appropriate. The Eurachem Guide provides information on selecting an appropriate PT scheme. In other cases, a problem may have occurred with the PT items, and the laboratory is encouraged to discuss its findings with the PT provider [95,96]. The fishbone diagram and the "5 Whys" are useful tools for investigating the root cause of a problem (Figures 1 and 2).

Figure 1: A hospital laboratory has provided results in a PT scheme for tumor markers in serum with a 20 percent deviation from the assigned value, although the daily internal controls performed well.

The laboratory investigated the process and discovered the mistake was due to an incorrect unit conversion factor introduced for PT results when the IT system was last updated. Since patient results are reported in a different unit than the one used by the PT provider, no patient results were affected [95,96].

A laboratory performing microbiology analyses obtained poor performance scores because the number of microorganisms found was underestimated. Laboratory investigations showed an issue with the incubation time and temperature applied by the analyst. The laboratory found that the analyst used an out-of-date procedure [95,96].

1. Poor growth of Listeria monocytogenes. Why?

2. Incorrect incubation time and temperature. Why?

3. The applied test procedure contained a mistake. Why?

4. An outdated version of the procedure was used. Why?

5. The revised procedure was not distributed. Why?

Root cause: the workload of the person in charge of document control.

Figure 2: The 5 Whys diagram is a useful tool for investigating the root cause of a problem.

Corrective and Preventive Actions

Steps of corrective and preventive action: (i) Define the event. Set an objective that identifies the problem and allows the effectiveness of the improvement in preventing the event to be measured at the end. (ii) Assemble a team. Select an active team or assign responsible personnel to identify the cause of the event. The team must work together in the root cause analysis to ensure a systematic understanding of the event's cause and effect. (iii) Find the root cause. Use RCA tools, such as the fishbone diagram and the 5 Whys, to understand the cause of the event; this makes planning corrective and preventive action easier [97-99]. (iv) Determine the corrective action. Ensure that the action implemented rectifies only the cause of the event and does not change any other part of the process that might affect the rectified event. A good plan is specific, realistic, systematic, measurable, and time-bound, and answers basic questions such as "What will be done?" and "How will it be achieved?" Corrective action is taken to rectify and eliminate the cause of actual poor performance in an ongoing event and to prevent it from recurring; preventive action, in contrast, is taken to prevent such an event from occurring in the first place [98-100]. Several measures can be taken to address poor performance through corrective and preventive action, such as removing personnel, modifying the method and routine, purchasing and installing a new instrument, and providing additional training and education. All of these measures should aim to increase testing accuracy. Preventive action should be established as policy so that the improvement is long-lasting rather than resolving only the immediate event. These actions must be monitored and their effectiveness assessed concurrently [100,101].

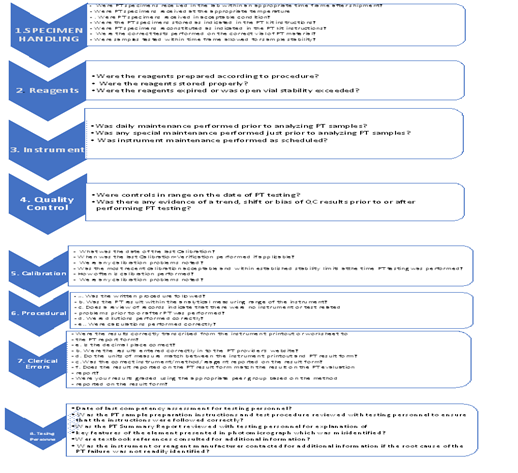

Figure 3: Check Steps for Proficiency Testing Corrective Action

Preanalytical Errors: A "No" response may indicate specimen handling issues, often due to failure to read PT kit instructions.

Analytical/Procedural Errors: A "No" response may suggest analytical or procedural errors, possibly due to not performing recommended instrument maintenance/calibration or not following written test procedures. Review PT kit instructions or standard operating procedures as needed.

Clerical Errors: A "No" response may point to clerical mistakes. Though different from patient result reporting, these errors may require additional staff training, instruction review, adding a second reviewer, or investigating the reporting format. Contact your proficiency testing provider if the reported results do not match the evaluation report.

Continuous Improvement Initiatives

The choice of which projects to undertake is important, and FOCUS-PDCA is a decision-making tool that can be used to select and manage improvement projects. This structured problem-solving methodology splits the process into two main phases, with a systematic review built into each stage to judge whether continued effort is worthwhile. First, "FOCUS" involves defining the problem and setting an achievable objective; then "PDCA" (Plan-Do-Check-Act) is the process for reaching that objective. At each "Act" step, the results should be reviewed to decide whether to proceed to the next step [101-103]. A "Check" at the end of the first phase determines whether the issue is within the laboratory's sphere of influence and whether the problem definition and objective are correct. This avoids wasted effort, and the "Check" (review) is a recurring theme, similar to establishing the cause of issues in the CAPA process. Continuous improvement initiatives keep problem-solving going beyond the immediate issue, so that the findings from RCA and CAPA can be implemented as process changes and monitored for effectiveness [101,103]. Incorporating continuous improvement into the laboratory's culture is essential to ensure that corrective actions are appropriate and effective and that performance is maintained or enhanced in the long term. To be effective, however, a structured program of projects or initiatives, driven by past information and aimed at improving processes, should be established. This contrasts with a "firefighting" strategy, in which action is taken only in response to an issue and frequently results in misdirected effort. For example, a sudden peak in failures for a particular analyte may trigger a flurry of troubleshooting of the analytical system for that analyte, yet the cause of the problem may lie in the pre-analytical phase or even in how the EQA samples are received and handled [102,103].

Staff Education and Training

One of the most significant causes of error is the relative lack of training given to laboratory personnel, which contrasts with the complexity of the tasks they are expected to perform. In the RT scheme, laboratory staff competency and its relation to laboratory performance are assessed. The methodology used has been to measure the cognitive skills of laboratory staff, i.e., the ability to understand and answer questions on the theory underpinning a given clinical biochemistry assay. In the UK, laboratory employees are now expected to know the underlying principles of clinical biochemistry [102-105]. This follows the successful implementation of the ACB National Vocational Qualifications (NVQ) scheme, a well-structured, certificated form of training with defined objectives that has recently been incorporated into the IBMS training program. In addition, the increasing dependence of laboratories on automation will help reduce human error but increases machine-related errors, which require a higher level of staff expertise to diagnose and remedy when they occur. Once the training and educational needs of laboratory personnel have been assessed, it is recommended that public awareness of the importance and complexity of laboratory medicine be increased to encourage the provision of adequate training resources. Steps should be taken to assign responsibility for RT and to implement its findings. An RT coordinator could be appointed for a given clinical area, e.g., diabetes, to monitor changes in performance, maintain contact with PT providers, and investigate any variability in laboratory performance between different types of samples. This work cannot take place without the necessary funding, so laboratory managers and clinicians must be made aware of the benefits and cost-effectiveness of RT through hard evidence of its efficacy [5,104,105].

Conclusion

In conclusion, this review underscores the importance of investigating and addressing unacceptable results in proficiency testing among medical laboratories. Such results indicate potential issues in laboratory performance, with implications for patient care and safety. To mitigate these challenges, laboratories must implement robust quality assurance measures, including staff training, adherence to standardized procedures, and regular equipment maintenance. Participation in proficiency testing programs is also vital, as it provides valuable insights for continuous improvement. Collaboration among laboratories and ongoing monitoring are essential for identifying and addressing common challenges, ultimately enhancing the quality and reliability of laboratory test results and improving patient outcomes.

References

- Alam, F., Saha, N., Islam, M., Ahmed, M., & Haque, M. (2022). Perception on environmental concern of pesticide use in relation to farmers’ knowledge. Journal of Environmental Science and Natural Resources, 13(1-2):94-99.

Publisher | Google Scholor - Benti, D., Birru, W., Tessema, W., & Mulugeta, M. (2022). Linking cultural and marketing practices of (agro)pastoralists to food (in)security. Sustainability, 14(14): 8233.

Publisher | Google Scholor - Bk, A., Hb, A., Birhane, H., & Ge, S. (2021). On farm reproductive performance and trait preferences of sheep and goat in pastoral and agro-pastoral areas of Afar regional state, Ethiopia. Journal of Animal Science and Research, 5(1).

Publisher | Google Scholor - Catley, A., Arasio, R., & Hopkins, C. (2023). Using participatory epidemiology to investigate women’s knowledge on the seasonality and causes of acute malnutrition in Karamoja, Uganda. Pastoralism Research Policy and Practice, 13(1).

Publisher | Google Scholor - Daly, Z. (2023). Food-related worry and food bank use during the COVID-19 pandemic in Canada: Results from a nationally representative multi-round study. BMC Public Health, 23(1).

Publisher | Google Scholor - Denkyirah, E., Okoffo, E., Adu, D., Aziz, A., & Ofori, A. (2016). Modeling Ghanaian cocoa farmers’ decision to use pesticide and frequency of application: The case of Brong Ahafo Region. SpringerPlus, 5(1).

Publisher | Google Scholor - Emeribe, C. (2023). Smallholder farmers perception and awareness of public health effects of pesticides usage in selected agrarian communities, Edo Central, Edo State, Nigeria. Journal of Applied Sciences and Environmental Management, 27(10):2133-2151.

Publisher | Google Scholor - Eshbel, A., Adicha, A., Tadesse, A., Tadesse, A., & Gebremeskel, Y. (2023). Demonstration of improved banana (William-1 variety) production and commercialization in Nyanghtom District of South Omo Zone, Southern Ethiopia. Research on World Agricultural Economy, 4(3):15-24.

Publisher | Google Scholor - Gamage, V., Samarakoon, S., & Malalage, G. (2022). The impact of pesticide sales promotion strategies on customer purchase intention. Sri Lanka Journal of Marketing, 8(2):84.

Publisher | Google Scholor - Gatew, S. (2024). Livelihood vulnerability of Borana pastoralists to climate change and variability in Southern Ethiopia. International Journal of Climate Change Strategies and Management, 16(1):157-176.

Publisher | Google Scholor - Gebru, G., Ichoku, H., & Phil-Eze, P. (2020). Determinants of smallholder farmers' adoption of adaptation strategies to climate change in Eastern Tigray National Regional State of Ethiopia. Heliyon, 6(7):e04356.

Publisher | Google Scholor - He, Q. (2023). How to promote agricultural enterprises to reduce the use of pesticides and fertilizers? An evolutionary game approaches. Frontiers in Sustainable Food Systems, 7.

Publisher | Google Scholor - Hirsi, S., Husein, A., & Awmuuse, A. (2021). Determinants of agro-pastoral households' livelihood diversification strategies in Awbare District, Fafan Zone of Somali State, Ethiopia. International Journal of Agricultural Economics, 6(6):256.

Publisher | Google Scholor - Hu, Z. (2020). What socio-economic and political factors lead to global pesticide dependence? A critical review from a social science perspective. International Journal of Environmental Research and Public Health, 17(21):8119.

Publisher | Google Scholor - Ibrahim, S., Özdeşer, H., Çavuşoğlu, B., & Shagali, A. (2021). Rural migration and relative deprivation in agro-pastoral communities under the threat of cattle rustling in Nigeria. Sage Open, 11(1).

Publisher | Google Scholor - Idrissou, L., Sacca, L., Imorou, H., & Gouthon, M. (2020). Farmers and pastoralists participation in the elaboration and implementation of sustainable agro-pastoral resources management plans in Northern Benin. Asian Journal of Agricultural Extension Economics & Sociology, 34-44.

Publisher | Google Scholor - Jahan, S., Mozumder, Z., & Shill, D. (2022). Use of herbal medicines during pregnancy in a group of Bangladeshi women. Heliyon, 8(1):e08854.

Publisher | Google Scholor - Jimmy, K., Edja, A., & Djohy, G. (2023). Appropriation of mobile phones in rural African societies: Case study of the Fulani pastoralists in Northern Benin. Information Development, 026666692311775.

Publisher | Google Scholor - Khan, M. (2022). Using the health belief model to understand pesticide use decisions. The Pakistan Development Review, 941-956.

Publisher | Google Scholor - Khan, M., & Damalas, C. (2015). Farmers' knowledge about common pests and pesticide safety in conventional cotton production in Pakistan. Crop Protection, 77:45-51.

Publisher | Google Scholor - Lima, J. (2022). First national-scale evaluation of temephos resistance in Aedes aegypti in Peru.

Publisher | Google Scholor - Liu, D., Huang, Y., & Luo, X. (2022). Farmers’ technology preference and influencing factors for pesticide reduction: Evidence from Hubei Province, China. Environmental Science and Pollution Research, 30(3):6424-6434.

Publisher | Google Scholor - Lu, J. (2022). Knowledge, attitudes, and practices on pesticide among farmers in the Philippines. Acta Medica Philippina, 56(1).

Publisher | Google Scholor - Lyu, F. (2023). The impact of anthropogenic activities and natural factors on the grassland over the agro-pastoral ecotone of Inner Mongolia. Land, 12(11):2009.

Publisher | Google Scholor - Macharia, I., Mithöfer, D., & Waibel, H. (2012). Pesticide handling practices by vegetable farmers in Kenya. Environment Development and Sustainability, 15(4):887-902.

Publisher | Google Scholor - Mbada, C., Olakorede, D., Igwe, C., Fatoye, C., Olatoye, F., Oyewole, A., … & Fatoye, F. (2020). Knowledge, perception, and use of medical applications among health professions’ students in a Nigerian university. Journal of Medical Education, 19(2).

Publisher | Google Scholor - Mergia, M., Weldemariam, E., Eklo, O., & Yimer, G. (2021). Small-scale farmer pesticide knowledge and practice and impacts on the environment and human health in Ethiopia. Journal of Health and Pollution, 11(30).

Publisher | Google Scholor - Mohamed-Brahmi, A. (2024). Analysis of management practices and breeders’ perceptions of climate change’s impact to enhance the resilience of sheep production systems: A case study in the Tunisian semi-arid zone. Animals, 14(6):885.

Publisher | Google Scholor - Nwachukwu, C. (2023). Green agriculture and food security, a review. IOP Conference Series Earth and Environmental Science, 1178(1):012005.

Publisher | Google Scholor - Nwadike, C., Joshua, V., Doka, P., Ajaj, R., Hashidu, U., Gwary-Moda, S., & Moda, H. (2021). Occupational safety knowledge, attitude, and practice among farmers in Northern Nigeria during pesticide application—a case study. Sustainability, 13(18):10107.

Publisher | Google Scholor - Okidi, L., Ongeng, D., Muliro, P., & Matofari, J. (2022). Disparity in prevalence and predictors of undernutrition in children under five among agricultural, pastoral, and agro-pastoral ecological zones of Karamoja sub-region, Uganda: A cross-sectional study. BMC Pediatrics, 22(1).

Publisher | Google Scholor - Olawumi, A., Grema, B., Suleiman, A., Michael, G., Umar, Z., & Mohammed, A. (2022). Knowledge, attitude, and practices of patients and caregivers attending a northern Nigerian family medicine clinic regarding the use of face mask during COVID-19 pandemic: A hospital-based cross-sectional study. Pan African Medical Journal, 41.

Publisher | Google Scholor - Otitoju, O., Adondua, M., Emmanuel, O., & Grace, O. (2022). Risk assessment of pesticide residues in some samples of carrots (Daucus carota). International Journal of Advanced Biochemistry Research, 6(2):42-48.

Publisher | Google Scholor - Palomino, M., Pinto, J., Yañez, P., Cornelio, A., Dias, L., Amorim, Q., … & Lima, J. (2022). First national-scale evaluation of temephos resistance in Aedes aegypti in Peru. Parasites & Vectors, 15(1).

Publisher | Google Scholor - Pattnaik, M., Nayak, A., Karna, S., Sahoo, S., Palo, S., Kanungo, S., … & Bhattacharya, D. (2023). Perception and determinants leading to antimicrobial (mis)use: A knowledge, attitude, and practices study in the rural communities of Odisha, India. Frontiers in Public Health, 10.

Publisher | Google Scholor - Pouokam, G., Album, W., Ndikontar, A., & Sidatt, M. (2017). A pilot study in Cameroon to understand safe uses of pesticides in agriculture, risk factors for farmers’ exposure and management of accidental cases. Toxics, 5(4):30.

Publisher | Google Scholor - Sewando, P. (2023). Climate change adaptation strategies for agro-pastoralists in Tanzania. Asian Journal of Advances in Agricultural Research, 21(2):30-39.

Spate, M., Yatoo, M., Penny, D., Shah, M., & Betts, A. (2022). Palaeoenvironmental proxies indicate long-term development of agro-pastoralist landscapes in Inner Asian mountains. Scientific Reports, 12(1).

Staveley, J., Law, S., Fairbrother, A., & Menzie, C. (2013). A causal analysis of observed declines in managed honey bees (Apis mellifera). Human and Ecological Risk Assessment: An International Journal, 20(2):566-591.

Tessema, R., Nagy, K., & Ádám, B. (2021). Pesticide use, perceived health risks and management in Ethiopia and in Hungary: A comparative analysis. International Journal of Environmental Research and Public Health, 18(19):10431.

Tessema, R., Nagy, K., & Ádám, B. (2022). Occupational and environmental pesticide exposure and associated health risks among pesticide applicators and non-applicator residents in rural Ethiopia. Frontiers in Public Health, 10.

Tofu, D., Fana, C., Dilbato, T., Dirbaba, N., & Tesso, A. (2021). The effects of climate change on the productivity of agropastoral systems in Ethiopia. Climate Change and Agricultural Sustainability, 12(4):205-214.

Tony, M., Ashry, M., Tanani, M., Abdelreheem, A., & Abdel-Samad, M. (2023). Bio-efficacy of aluminum phosphide and cypermethrin against some physiological and biochemical aspects of Chrysomya megacephala maggots. Scientific Reports, 13(1).

Wang, W., Wang, J., Liu, K., & Wu, Y. (2020). Overcoming barriers to agriculture green technology diffusion through stakeholders in China: A social network analysis. International Journal of Environmental Research and Public Health, 17(19):6976.

Wicht, A., Reder, S., & Lechner, C. (2021). Sources of individual differences in adults’ ICT skills: A large-scale empirical test of a new guiding framework. PLoS ONE, 16(4):e0249574.

Wylie, B., Ae-Ngibise, K., Boamah, E., Mujtaba, M., Messerlian, C., et al. (2017). Urinary concentrations of insecticide and herbicide metabolites among pregnant women in rural Ghana: A pilot study. International Journal of Environmental Research and Public Health, 14(4):354.

Xie, S., Ding, W., Ye, W., & Deng, Z. (2021). Agro-pastoralists’ perception of climate change and adaptation in the Qilian Mountains, China.

Xie, S., Ding, W., Ye, W., & Deng, Z. (2022). Agro-pastoralists’ perception of climate change and adaptation in the Qilian Mountains of Northwest China. Scientific Reports, 12(1).

Yang, X., Zhao, S., Liu, B., Gao, Y., Hu, C., Li, W., … & Wu, K. (2022). Bt maize can provide non-chemical pest control and enhance food safety in China. Plant Biotechnology Journal, 21(2):391-404.

Yilmaz, H. (2021). Economic and toxicological aspects of pesticide management practices: Empirical evidence from Turkey. International Letters of Natural Sciences, 81:23-30.

Zhan, P., Hu, G., Han, R., & Yu, K. (2021). Factors influencing the visitation and revisitation of urban parks: A case study from Hangzhou, China. Sustainability, 13(18):10450.

Zhou, H. (2024). Exploration of sustainable agro-pastoral integration development models in the Qinghai-Tibet Plateau. Highlights in Business Economics and Management, 33:587-593.