Research Article

In-Training Assessments of University Residents at Rawalpindi Medical University Pakistan

Assistant Professor Community Medicine, Rawalpindi Medical University, Rawalpindi, Pakistan.

*Corresponding Author: Rizwana Shahid, Assistant Professor Community Medicine, Rawalpindi Medical University, Rawalpindi, Pakistan

Citation: Shahid R, Fatima F, Umar M. (2024). In-Training Assessments of University Residents at Rawalpindi Medical University Pakistan. Journal of Clinical Medicine and Practice, BioRes Scientia Publishers. 1(1):1-07. DOI: 10.59657/jcmp.brs.24.007

Copyright: © 2024 Rizwana Shahid. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Received: June 20, 2024 | Accepted: July 19, 2024 | Published: July 24, 2024

Abstract

Objectives: To analyze in detail the in-training assessment results of university residents and to compare the mean scores of the major training programs.

Subjects & Methods: A cross-sectional analytical study was conducted among 56 university residents enrolled in MS/MD training programs of Rawalpindi Medical University & Allied Hospitals. This record-based study comprehensively analyzed the in-training assessment-1st year results of all training programs. Assessments were conducted in February 2024. Data were analyzed using Microsoft Excel 2016, and descriptive statistics were applied. Mean scores of trainees enrolled in MD Medicine & Allied, MS Surgery & Allied, MS Obstetrics & Gynecology and MD Pediatrics were compared by independent-samples t-test. P < 0.05 was considered significant.

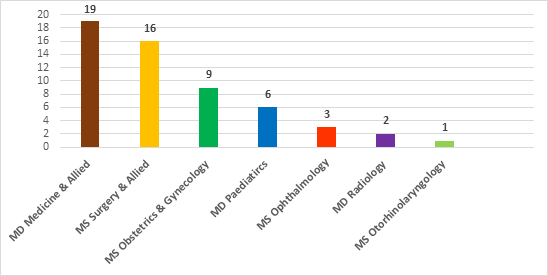

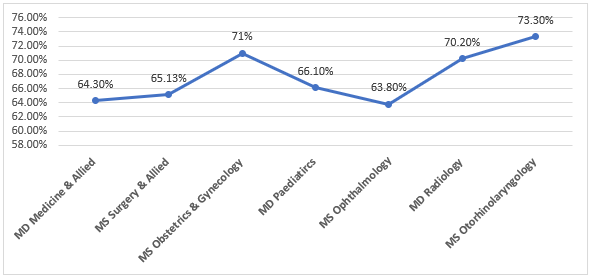

Results: Of the 56 trainees who underwent in-training assessment-1st year, most (19) were enrolled in Medicine & Allied training programs, followed by 16 in Surgery & Allied programs, 9 in Obstetrics & Gynecology and 6 in Pediatrics. The numbers of trainees assessed in Ophthalmology, Radiology and Otorhinolaryngology were 3, 2 and 1, respectively. Mean theory and OSCE scores of MD Medicine & Allied trainees were 63.6 ± 9 and 67.3 ± 8.2, respectively. Mean theory and OSCE scores of MS Surgery & Allied trainees were 70.01 ± 8.9 and 60.74 ± 3.8, respectively. The mean OSCE score of MS Obstetrics & Gynecology trainees (72.8 ± 6.3) was relatively higher than their mean theory score. Both assessment scores of MD Pediatrics trainees were almost equal. The mean theory score of MS Surgery & Allied trainees was significantly higher than that of MD Medicine & Allied trainees (P = 0.04), while the mean OSCE score of MD Medicine & Allied trainees was significantly higher (P = 0.006). The mean OSCE score of MS Obstetrics & Gynecology trainees was also significantly higher than that of MS Surgery & Allied trainees (P = 0.0001). The highest overall assessment score was that of MS Otorhinolaryngology (73.3%), followed by MS Obstetrics & Gynecology (71%).

Conclusion: MD Medicine & Allied trainees need to work hard to strengthen their medical knowledge, while MS Surgery & Allied trainees should concentrate on enhancing their clinical competencies.

Keywords: in-training assessment-1st year; md medicine & allied; ms surgery & allied; ms obstetrics & gynecology; md pediatrics

Introduction

Assessment, as an integral part of the curriculum, is of paramount importance in ensuring trainees' learning. It should be treated within the curriculum as part of the teaching process rather than as a separate entity [1]. Assessment should be ongoing, confirming that teaching and learning form a dynamic process. Aligning assessment with the specified learning outcomes of the curriculum and the planned learning activities is imperative [2]. Assessment is one of the pillars of education, without which implementation of the curriculum is perceived as impossible [3]. Certain guiding principles pertaining to the assessment of trainees should be strictly followed for smooth execution of the whole educational process. These include fairness of assessment, its communication to all students through resource books and social media links, clear eligibility criteria for assessment, and the provision of constructive feedback for improvement [4]. Although summative assessment is essential for awarding degrees and certifying a student as competent, there is a significant global trend toward emphasizing formative assessment, particularly of postgraduate medical residents, to promote comprehensive learning. Therefore, careful deliberation on curriculum content, especially in higher medical education, is essential [5]. Here the importance of curriculum mapping cannot be overlooked: it not only ensures alignment of learning outcomes with teaching strategies and assessment tools but also helps identify gaps and verify compliance with accreditation models [6].

Assessment practices vary across degree-awarding medical colleges and universities worldwide. The in-training assessment conducted by the College of Physicians & Surgeons Pakistan (CPSP) is one component of summative assessment and consists of a yearly written assessment of trainees together with their Workplace-Based Assessment (WPBA). It differs from the Intermediate Module (IMM) examination, another component of summative assessment, which is essentially a mid-residency assessment [7]. On the other hand, the Royal College of Physicians and Surgeons of Canada (RCPSC) recommends regular in-training assessment of postgraduate medical residents as formative assessment. Although this approach places greater emphasis on regular, small module-based assessments, it also has many lacunae that require the attention of the competent authorities [8]. Although Ringsted C et al studied the confidence level of junior doctors after in-training assessment, data on the analysis of in-training assessment results remain scarce [9]. Several factors help make in-training assessments impactful, such as a comfortable environment and credible feedback from both supervisors and trainees [10]. In-training assessments of postgraduate trainees at Rawalpindi Medical University and its allied hospitals are therefore conducted by the respective Deans so that trainees are assessed in a familiar workplace. The present study aims to comprehensively analyze the results of the theoretical and clinical assessments taken on completion of the 1st year of training. It should enable us to recognize trainees' deficiencies, which the stakeholders can then consider and, if required, address through appropriate modifications.

Subjects & Methods

A cross-sectional descriptive study was conducted to comprehensively analyze the results of in-training assessment-1st year, which was taken in February 2024 by the respective Deans on completion of the 1st year of MS/MD training at Rawalpindi Medical University (RMU) & Allied Hospitals. Trainees in MS/MD training programs are inducted through the Central Induction Policy (CIP) and are known as "university residents". In-training assessments of these residents are taken following official notification by the Examination Department of RMU. The plan of in-training assessments in accordance with the Revised Curriculum and Assessment Scheme 2021 [11] is tabulated below:

Table 1: Revised Assessment Plan for university residents

| Assessments for residents in 4-year training programs | Assessments for residents in 5-year training programs |
| --- | --- |
| In-training assessment – 1st year | In-training assessment – 1st year |
| Mid Training Assessment (MTA) | Mid Training Assessment (MTA) |
| In-training assessment – 3rd year | In-training assessment – 3rd year |
| Final Training Assessment (FTA) | In-training assessment – 4th year |
| – | Final Training Assessment (FTA) |

In-training assessments at RMU & Allied hospitals are formative assessments with a pass percentage of 50%. Trainees scoring below 50% are not reassessed; however, two-way feedback is given due consideration, and trainees with poor scores are counseled by the respective Deans for improvement. A total of 56 trainees underwent in-training assessment-1st year, belonging to Medicine & Allied, Surgery & Allied, Obstetrics & Gynecology, Pediatrics, Radiology, Ophthalmology and Otorhinolaryngology. Their theoretical knowledge at the "Knows How" level of Miller's pyramid [12], which primarily reflects the application of knowledge, was assessed through Short Answer Questions (SAQs) and Short Essay Questions (SEQs), while clinical competencies were assessed by Objective Structured Clinical Examinations (OSCEs). Trainees of MD Radiology were additionally assessed by table viva, apart from SAQs and OSCEs. Data were analyzed using Microsoft Excel 2016, and descriptive statistics were applied. Differences in mean scores of trainees from the major specialties were tested by independent-samples t-test. P < 0.05 was considered significant.
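The 50% pass mark described above can be expressed as a simple check. The following is a minimal, hypothetical Python sketch: the function name and the rule of applying the pass mark to each component separately are illustrative assumptions, not part of the RMU assessment scheme. It uses the MS Cardiology trainee's scores from Table 2 as input.

```python
# Hypothetical sketch: flag assessment components below the formative pass mark.
# Assumption: the 50% pass mark is applied to each component separately;
# the source states only a pass percentage of 50%.

PASS_MARK = 50.0  # pass percentage stated for RMU in-training assessments


def components_below_pass(scores: dict[str, float], pass_mark: float = PASS_MARK) -> list[str]:
    """Return, in alphabetical order, the components scored below the pass mark."""
    return sorted(name for name, percent in scores.items() if percent < pass_mark)


# MS Cardiology trainee (Table 2): theory 47%, OSCE 63%
cardiology = {"Theory": 47.0, "OSCE": 63.0}
flagged = components_below_pass(cardiology)
if flagged:
    # Formative assessment: no reassessment, but the trainee is counseled by the Dean.
    print("Refer for counseling:", ", ".join(flagged))
```

Because these assessments are formative, the sketch only produces a referral list for counseling; it does not model any reassessment.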

Results

A total of 56 trainees underwent in-training assessment-1st year in February 2024, conducted by their Deans on completion of the 1st year of training and fulfillment of the respective requisites. Most of them (19) were from Medicine & Allied disciplines, as shown below in Figure 1.

Figure 1: Program-wise distribution of trainees undergoing in-training assessment-1st year

A total of 19 trainees enrolled in Medicine & Allied training programs underwent in-training assessment-1st year in February 2024, and the highest overall score was achieved by the MD Psychiatry trainee, as illustrated below in Table 2.

Table 2: In-training assessment results of Medicine & Allied trainees (n = 19)

| Sr. # | Medicine & Allied training program | No. of trainees assessed | Theory (%) | OSCE (%) | Overall average (%) |
| --- | --- | --- | --- | --- | --- |
| 1 | MD Internal Medicine | 15 | 66 | 65.3 | 65.1 |
| 2 | MD Emergency Medicine | 2 | 55 | 74.3 | 64.6 |
| 3 | MD Psychiatry | 1 | 63 | 82 | 72.5 |
| 4 | MS Cardiology | 1 | 47 | 63 | 55 |
| | Mean ± SD | 19 | 63.6 ± 9 | 67.3 ± 8.2 | – |

Theory (medical knowledge) and OSCE (clinical competency) scores of Surgery & Allied trainees are presented below in Table 3.

Table 3: In-training assessment results of Surgery & Allied trainees (n = 16)

| Sr. # | Surgery & Allied training program | No. of trainees assessed | Theory (%) | OSCE (%) | Overall average (%) |
| --- | --- | --- | --- | --- | --- |
| 1 | MS General Surgery | 10 | 71.35 | 59 | 65.1 |
| 2 | MS Pediatric Surgery | 2 | 63 | 57.7 | 60.35 |
| 3 | MS Orthopedics | 2 | 70.2 | 67 | 68 |
| 4 | MS Plastic Surgery | 1 | 61.5 | 58.5 | 60 |
| 5 | MS Neurosurgery | 1 | 84 | 61.5 | 72.2 |
| | Mean ± SD | 16 | 70.01 ± 8.9 | 60.74 ± 3.8 | – |

Theory and OSCE scores of trainees from programs other than Medicine & Allied and Surgery & Allied are illustrated below in Table 4.

Table 4: Assessment scores of trainees from programs other than Medicine & Allied and Surgery & Allied

| Sr. # | Training program | No. of trainees assessed | Theory (%), mean ± SD | OSCE (%), mean ± SD | Overall average (%) |
| --- | --- | --- | --- | --- | --- |
| 1 | MS Obstetrics & Gynecology | 9 | 69.1 ± 5.6 | 72.8 ± 6.3 | 71 |
| 2 | MD Pediatrics | 6 | 66 ± 5.9 | 66.3 ± 8.2 | 66.1 |
| 3 | MS Ophthalmology | 3 | 66.3 ± 6.1 | 61.3 ± 0.58 | 63.8 |
| 4 | MS Otorhinolaryngology | 1 | 66 | 81.4 | 73.7 |

Trainees of MD Radiology were also assessed through table viva in addition to theory and OSCE, as shown below in Table 5.

Table 5: In-training assessment-1st year results of MD Radiology trainees

| Training program | No. of trainees assessed | Theory (%) | OSCE (%) | Table viva (%) | Overall average (%) |
| --- | --- | --- | --- | --- | --- |
| MD Radiology | 2 | 67.5 | 76 | 67 | 70.2 |
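The source does not state how the "Overall average" column was computed. For most rows (though not all, for example MD Internal Medicine) it matches an unweighted mean of the component percentages, e.g. MD Radiology: (67.5 + 76 + 67) / 3 ≈ 70.2%. A minimal Python sketch, assuming equal weighting of theory, OSCE and, where used, table viva (the function name is hypothetical):

```python
from statistics import fmean


# Assumption: every component (theory, OSCE, table viva) carries equal weight.
def overall_average(component_scores: list[float], ndigits: int = 1) -> float:
    """Unweighted mean of component percentages, rounded for reporting."""
    if not component_scores:
        raise ValueError("at least one component score is required")
    return round(fmean(component_scores), ndigits)


radiology = overall_average([67.5, 76.0, 67.0])  # theory, OSCE, table viva (Table 5)
ent = overall_average([66.0, 81.4])              # theory, OSCE (Table 4)
print(f"MD Radiology: {radiology}%  MS Otorhinolaryngology: {ent}%")
```

This prints 70.2% and 73.7%, the values listed in Tables 5 and 4; a weighted scheme would only require replacing `fmean` with a weighted sum.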

The trend of in-training assessment-1st year results of trainees in the teaching hospitals of RMU is shown below in Figure 2.

Figure 2: Trend of in-training assessment-1st year results

As Medicine & Allied and Surgery & Allied trainees constituted the majority of those assessed, their mean theory and OSCE scores in in-training assessment-1st year were compared by independent-samples t-test, as depicted below in Table 6.

Table 6: Comparison of theory and OSCE mean scores of Medicine & Allied and Surgery & Allied trainees

| Assessment | MD Medicine & Allied (n = 19), mean ± SD | MS Surgery & Allied (n = 16), mean ± SD | P-value |
| --- | --- | --- | --- |
| Theory | 63.6 ± 9 | 70.01 ± 8.9 | *0.04 |
| OSCE | 67.3 ± 8.2 | 60.74 ± 3.8 | *0.006 |

*Statistically significant difference
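The article reports an independent-samples t-test computed in Microsoft Excel 2016 but does not state whether equal variances were assumed. As a rough cross-check, the following standard-library Python sketch applies Student's pooled-variance t-test to the summary statistics (mean, SD, n) in Table 6. The helper names are hypothetical, and the P-value is obtained by numerically integrating the t density with Simpson's rule rather than from a statistics package. Under this assumption the recomputed P-values come out close to the reported 0.04 and 0.006.

```python
import math


def t_pdf(x: float, df: float) -> float:
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_norm = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t: float, df: float, steps: int = 2000) -> float:
    """Two-sided p-value: 1 - 2 * P(0 <= T <= |t|), integrated with Simpson's rule."""
    a = abs(t)
    if a == 0.0:
        return 1.0
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def pooled_t_test(m1: float, s1: float, n1: int,
                  m2: float, s2: float, n2: int) -> tuple[float, int, float]:
    """Student's independent-samples t-test (equal variances) from mean, SD and n."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m1 - m2) / se
    return t, df, two_sided_p(t, df)


# Summary statistics from Table 6: (mean, SD, n) for Medicine & Allied, then Surgery & Allied
table6 = {
    "Theory": ((63.6, 9.0, 19), (70.01, 8.9, 16)),
    "OSCE": ((67.3, 8.2, 19), (60.74, 3.8, 16)),
}
for assessment, (medicine, surgery) in table6.items():
    t, df, p = pooled_t_test(*medicine, *surgery)
    print(f"{assessment}: t = {t:.2f}, df = {df}, P = {p:.3f}")
```

Running it gives t ≈ -2.11 (P ≈ 0.04) for theory and t ≈ 2.94 (P ≈ 0.006) for OSCE, with 33 degrees of freedom. Welch's unequal-variance test, a common alternative, would give a somewhat smaller P-value for the OSCE comparison because the two SDs (8.2 vs 3.8) differ markedly. The same function can be applied to the values in Tables 7 and 8.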

Likewise, the differences in mean theory (medical knowledge) and OSCE (clinical competency) scores between MS Obstetrics & Gynecology and MD Pediatrics trainees were statistically tested and found not to be significant, as shown below in Table 7.

Table 7: Comparison of mean theoretical and clinical scores of MS Obstetrics & Gynecology and MD Pediatrics trainees

| Assessment | MS Obstetrics & Gynecology (n = 9), mean ± SD | MD Pediatrics (n = 6), mean ± SD | P-value |
| --- | --- | --- | --- |
| Theory | 69.1 ± 5.6 | 66 ± 5.9 | 0.32 |
| OSCE | 72.8 ± 6.3 | 66.3 ± 8.2 | 0.10 |

Comparison of mean theory and OSCE scores between trainees of the two major MD programs, and between those of the two major MS programs, revealed a highly significant difference (P = 0.0001) only in the OSCE scores of MS Surgery & Allied and MS Obstetrics & Gynecology trainees, as depicted below in Table 8.

Table 8: Comparison of theory and OSCE scores between trainees of the two major MD programs and between those of the two major MS programs

| Assessment | MD Medicine & Allied (n = 19), mean ± SD | MD Pediatrics (n = 6), mean ± SD | P-value |
| --- | --- | --- | --- |
| Theory | 63.6 ± 9 | 66 ± 5.9 | 0.54 |
| OSCE | 67.3 ± 8.2 | 66.3 ± 8.2 | 0.79 |

| Assessment | MS Surgery & Allied (n = 16), mean ± SD | MS Obstetrics & Gynecology (n = 9), mean ± SD | P-value |
| --- | --- | --- | --- |
| Theory | 70.01 ± 8.9 | 69.1 ± 5.6 | 0.78 |
| OSCE | 60.74 ± 3.8 | 72.8 ± 6.3 | *0.0001 |

*Statistically significant difference

Discussion

On reviewing the trend of overall scores achieved in in-training assessment-1st year by university residents of RMU & Allied hospitals, the highest score (73.3%) was that of the MS Otorhinolaryngology trainee, followed by MS Obstetrics & Gynecology trainees (71%) and MD Radiology trainees (70.2%) (Figure 2). On comparing the results of the different assessment modalities, the differences in theory and OSCE scores between MS Obstetrics & Gynecology and MD Pediatrics trainees were not statistically significant (Table 7). In contrast, the difference in OSCE scores (but not theory scores) between MS Surgery & Allied and MS Obstetrics & Gynecology trainees was highly significant (Table 8). The mean theory score of MS Surgery & Allied trainees was slightly higher than that of MS Obstetrics & Gynecology trainees, whereas MS Obstetrics & Gynecology trainees scored significantly higher in OSCE, indicating adequate acquisition of clinical competencies (Table 8). As this assessment was taken on completion of the 1st year of training, this finding was in accordance with the Entrustable Professional Activities specified in their respective logbooks. A cross-sectional study among Obstetrics & Gynecology trainees from Denmark, Sweden and Norway revealed discrepancies between level of training and confidence in performing ultrasound examinations independently [13]. A study by Salvesen KA et al among European trainees attributed deficits in competency-based medical education to a lack of consistency in training standards across countries [14]. The most promising way to overcome such discrepancies appears to be clarity in the curriculum regarding the Table of Specifications (TOS) planned for the assessment of trainees in each year. This strategy would help trainees not only attain the relevant theoretical knowledge but also acquire the intended clinical competencies.

The overall score attained by MD Medicine & Allied trainees was almost equal to that of MS Surgery & Allied trainees in the present study, as shown in Figure 2. However, the two groups differed significantly in both mean theory and mean OSCE scores (Table 6): Medicine & Allied trainees had comparatively deficient theoretical knowledge, whereas Surgery & Allied trainees had relatively inadequate clinical competencies. A systematic review by Pakkasjarvi N et al highlighted the need for competence-based training in all surgical disciplines [15]. The mean OSCE score of Surgery & Allied trainees in in-training assessment-1st year was 60.74 ± 3.8, compared with 67.3 ± 8.2 for Medicine & Allied trainees. Although the University Residency Program at RMU & Allied hospitals is based on the Competency-Based Medical Education (CBME) framework of the Accreditation Council for Graduate Medical Education (ACGME) [16], trainees' acquisition of the required medical knowledge and clinical skills should be deliberated and strategized by all stakeholders, including institutional curriculum committee members and Deans, both to improve it and to bring the standard of our Higher Education Institutes (HEIs) on par with the international standards specified by the World Federation for Medical Education [17].

On detailed analysis of MD Medicine & Allied results, OSCE scores were comparatively better than theory scores (Table 2). This highlights the need for greater emphasis on studying textbooks, giving presentations and searching the literature to keep abreast of subject-related practices and advances worldwide. According to Nagasaki K et al, the General Medicine in-training examination (GM-ITE) was perceived as quite beneficial in determining clinical knowledge acquisition among Japanese postgraduate residents. This examination was intended to upgrade the country's training programs, and the study also verified a positive correlation between the GM-ITE and PLAB-I scores of those residents [18]. Degree Awarding Institutes (DAIs) across the world are not only responsible for conducting examinations and awarding degrees but are also meant to revolutionize educational systems and training programs [19]. In contrast to MD Medicine & Allied residents, MS Surgery & Allied trainees scored better in theory than in OSCE (Table 3). According to Harden et al, OSCE is a valid and beneficial tool for enhancing the clinical competencies of medical students, as they practice history taking, clinical examination, counseling and case management in a controlled environment within a specified time frame [20]. Apart from assessing undergraduate medical students, OSCE has been used extensively to assess diverse clinical competencies among postgraduate residents and candidates appearing in licensing examinations [21]. Despite its complexity, its time-intensive nature and the multiple resources needed to arrange 10-15 OSCE stations, OSCE is still perceived as a valid and reliable tool and hence the gold standard for measuring clinical performance. It has been perceived positively by both examiners and students [22].
Recent research by Huang T et al among medical postgraduates emphasized that team-, case-, lecture- and evidence-based learning strategies augment clinical and research competencies more than lecture-based learning alone [23]. A cross-sectional survey by Alam L et al pointed out many loopholes in the competency-based training programs of Pakistan that should be stringently addressed by our policy makers [24]. Although short intensive preparatory courses are arranged every six months, in addition to regular 360-degree evaluation of university residents and their workplace-based assessments, in our opinion monthly clinical assessment of trainees at departmental level, using multiple assessment tools such as OSCE, long case and short case, could prove advantageous in achieving the desired outcomes.

Conclusion & Recommendations

There is a dire need for MD Medicine & Allied trainees to boost their medical knowledge and for MS Surgery & Allied trainees to improve their clinical skills. Mock exams and preparatory drills should frequently be conducted at Deanery or departmental level to overcome these deficiencies.

Declarations

Conflicts of Interest

The authors declare no conflict of interest.

Source of Funding

The author(s) received no financial support for the research, authorship and/or publication of this article.

References

- Kime S. (2021). Evidence Based Education. Connecting curriculum and assessment.
- Ismail MA-A, Mat Pa MN, Mohammad JAM, Yusoff MSB. (2020). Seven steps to construct an assessment blueprint: a practical guide. Education in Medicine Journal, 12(1):71-80.
- Mikre F. (2011). The roles of assessment in curriculum practice and enhancement of learning. Ethiop J Educ & Sc, 5(2):101-114.
- James D. (2000). Making the graduate: perspectives on student experience of assessment in higher education. In: Filer A (2003). Assessment: Social practice and social product. London: Routledge, 151-167.
- Schuwirth LW, van der Vleuten CP. (2011). Programmatic assessment: From assessment of learning to assessment for learning. Med Teach, 33(6):478-485.
- Al-Eyd G, Achike F, Agarwal M, Atamna H, Atapattu DM, Castro L, et al. (2018). Curriculum mapping as a tool to facilitate curriculum development: a new School of Medicine experience. BMC Med Educ, 18:185.
- College of Physicians & Surgeons Pakistan. (2019). CPSP National Residency Program: Competency Model based Residency Program.
- Harvey EJ. (2011). Royal College White Papers: Assessment of training. J Can Chir, 54:3.
- Ringsted C, Pallisgaard J, Ostergaard D, Scherpbier A. (2004). The effect of in-training assessment on clinical confidence in postgraduate education. Med Educ, 38(12):1261-1269.
- Dijksterhuis MGK, Schuwirth LWT, Braat DDM, Teunissen PW, Scheele F. (2013). A qualitative study on trainees' and supervisors' perceptions of assessment for learning in postgraduate medical education. Med Teach, 35(8):e1396-e1402.
- Umar M, Khan JS, Shahid R, Khalid R. (2022). University Residency Program.
- Witheridge A, Ferns G, Scott-Smith W. (2019). Revisiting Miller's pyramid in medical education: the gap between traditional assessment and diagnostic reasoning. Int J Med Educ, 10:191-192.
- Tolsgaard MG, Rasmussen MB, Tappert C, Sundlers M, Sorensen JL, Ottesen B, et al. (2014). Which factors are associated with trainees' confidence in performing obstetric and gynecological ultrasound examinations? Ultrasound Obstet Gynecol, 43:444-451.
- Salvesen KA, Lees C, Tutschek B. (2010). Basic European ultrasound training in obstetrics and gynecology: where are we and where do we go from here? Ultrasound Obstet Gynecol, 36(5):525-529.
- Pakkasjarvi N, Anttila H, Pyhalto K. (2024). What are the learning objectives in surgical training: a systematic literature review of the surgical competence framework. BMC Med Educ, 24:119.
- Edgar L, McLean S, Hogan SO, Hamstra S, Holmboe ES. (2020). ACGME: The Milestone Guidebook.
- World Federation for Medical Education (WFME). (2015). Postgraduate Medical Education: WFME Global Standards for Quality Improvement.
- Nagasaki K, Nishizaki Y, Nojima M, Shimizu T, Konishi R, Okubo T, et al. (2021). Validation of General Medicine in-training examination using the professional and linguistic assessments board examination among postgraduate residents in Japan. Int J Gen Med, 14:6487-6495.
- Rawalpindi Medical University. RMU Act 2017 (Act XVI of 2017), Government of Punjab.
- Harden RM, Stevenson M, Downie WW, Wilson GM. (1975). Assessment of clinical competence using objective structured examination. Br Med J, 1:447-451.
- Sloan D, Donnelly MB, Schwartz R, Strodel W. (1995). The objective structured clinical examination: the new gold standard for evaluating postgraduate clinical performance. Ann Surg, 222(6):735-742.
- Majumder MAA, Kumar A, Krishnamurthy K, Ojeh N, Adams OP, Sa B. (2019). An evaluative study of objective structured clinical examination (OSCE): students and examiners perspectives. Adv Med Educ Pract, 10:387-397.
- Huang T, Zhou S, Wei Q, Ding C. (2024). Team-, case-, lecture- and evidence-based learning in medical postgraduates training. BMC Med Educ, 24:675.
- Alam L, Alam M, Shafi MN, Khan S, Khan ZM. (2022). Meaningful in-training and end-of-training assessment: The need for implementing a continuous workplace-based formative assessment system in our training programs. Pak J Med Sci, 38(5):1132-1137.